Why AI Won’t Take 99% of Jobs

We’ve got a running joke on my team.

Any time we find a new tool that does in minutes what used to take us days, someone says, “Welp, that’s it—our jobs are over. Let’s buy some cows, move to a tiny farm, and ride off into the sunset.” We’ve said it so often that now it’s just: “Guys… cows. Cowboys.”

It’s a fun bit. But the reality is the opposite: as AI gets more powerful, the contribution of every single team member becomes more important, not less.

I get messages all the time from developers, designers, and other professionals who admit they’re losing sleep, terrified AI will steal their jobs. It’s a fair worry—especially with LinkedIn doom-posts, “AI gurus,” and the general panic floating around.

But here’s the truth: there isn’t a finite number of jobs, because there isn’t a finite number of problems. Solve one, and three more appear—that’s literally the history of work.

There will always be problems to solve, and we’ll always need humans to solve them.

“Wait… why? Can’t we just let AGENTS solve everything?”

This is not going to happen.

A) Because turning your business into 500 agents running your money and reputation 24/7 with zero oversight is basically asking for chaos. Would you trust that?

B) Because agents carry no accountability. A job isn’t just a stack of tasks—it’s responsibility. You put your name on the outcome. Agents can’t do that.

Let me break it down.

The Quick Take (Pin this to your brain)

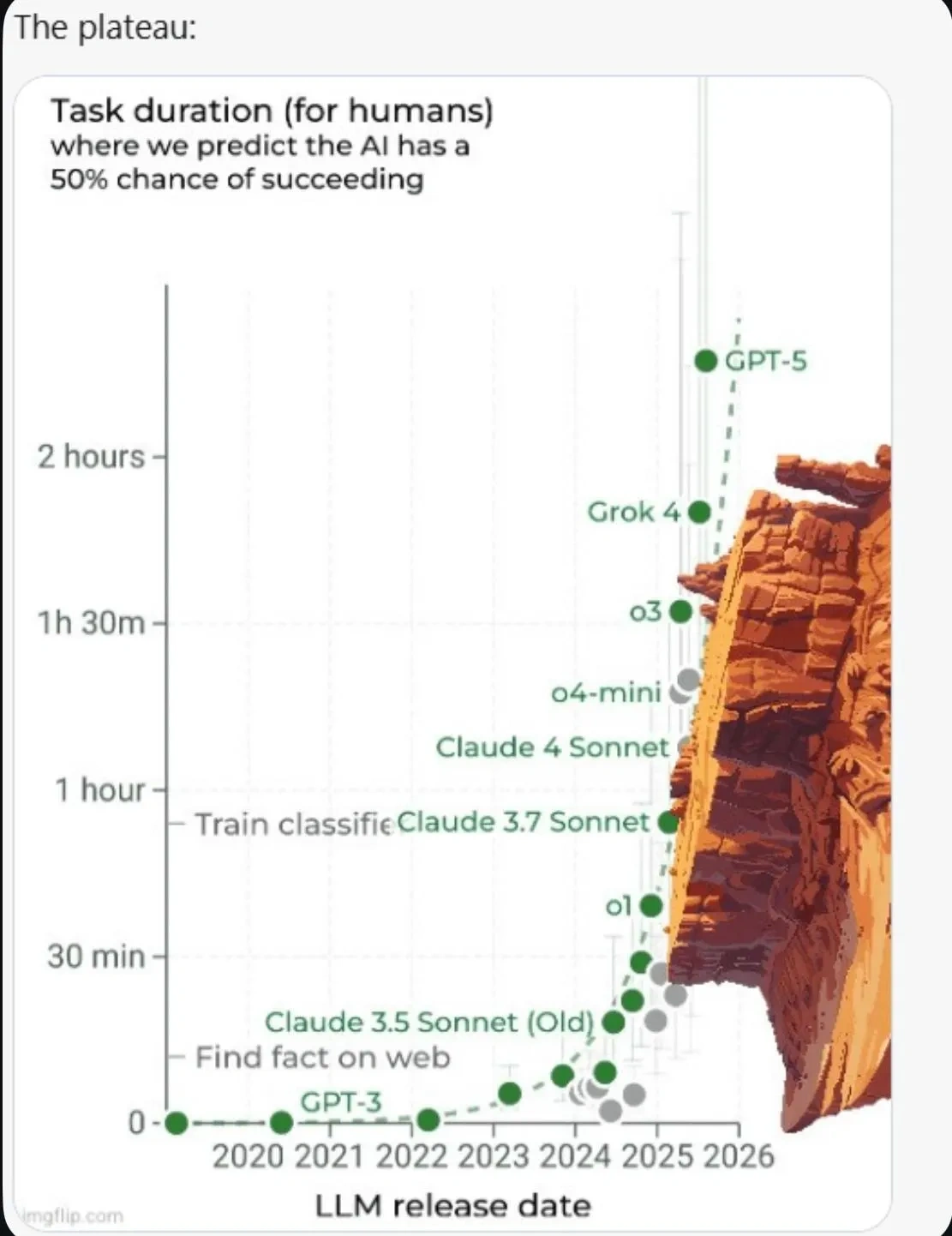

- AI ≠ human intelligence. More data + more compute = the ability to execute longer tasks. But executing tasks that would take a human a long time ≠ being more intelligent than humans. Otherwise, calculators would already qualify as superintelligence!

Once you understand this, you’ll see why the “AI will take 99% of jobs” argument is misleading:

- AI takes tasks, not whole jobs. Most jobs are bundles of tasks AND responsibility. What AI does eat is the work of the average passive worker, so you need to reinvent yourself.

- Humans stay accountable. Businesses can’t offload responsibility to a black box; someone has to be on the hook. A one-person $1 billion company means one person carrying the responsibility for hundreds of processes and tasks at once. Try that if you want to be miserable and lose your mind (good luck).

- Your move: Treat AI like a power tool. Use it to ship more value, faster—and keep the judgment, context, and relationships human.

I think the big misconception is this:

AI is absolutely changing 99% of jobs as we know them today—no question. If you cling to old habits, you’ll get left behind. But that doesn’t mean the problems disappear.

You can’t keep riding a horse-drawn carriage while everyone else is in a Ferrari.

This isn’t a replacement problem—it’s an education problem. We shouldn’t rely on Universal Safety Nets (like UBI). We need Universal AI Education so people can bridge the gap and keep creating value.

First Principles: What Today’s AI Actually Is

OK, let’s take a deep breath and think about what these systems actually are.

AI today is basically a supercharged pattern machine with huge amounts of information embedded in it and great talking skills.

Think of the world's best DJ remixing words with patterns learned from humans. It’s fast, broad, sometimes brilliant—but it doesn’t understand the world the way you do.

- It doesn’t have persistent memory of real experiences.

- It doesn’t understand the physical world the way we, cats, or any other animal do.

- It doesn’t plan long-term with values or intent.

- It predicts words.

So, with the technology we have today, talk of AI “consciousness,” “self-awareness,” or a genuine sense of self-interest is pure nonsense.

Could future breakthroughs push past today’s ceiling? Sure, that’s possible. But we’re not there yet, and simply following current scaling laws (“just add more compute and out pops intelligence”) won’t get us there.

Three Flaws in the “AI Takes 99% of Jobs” Idea

1) “More Data + More Compute = A Super-Intelligent Being”

The claim: Scale it big enough and you get a digital Einstein.

Reality: We don’t even have a precise, universally agreed definition of what “intelligence” is. Are we talking about IQ-style problem solving? Knowledge recall? Creativity? Social skills? Emotional awareness? Behavioral judgment? Each is different—and current AI doesn’t come close to the full package.

But let’s simplify. Forget “intelligence” for a second. The real questions are:

a) Can AI destroy us?

b) Can AI take our jobs?

a) Destruction: Why would AI even want to? For that, it would need self-interest or survival instinct. Can anyone explain how “self-interest” suddenly emerges out of more data and bigger GPUs? Spoiler: it doesn’t.

b) Jobs: Yes, AI will (and already does) take on parts of our old jobs. That’s exactly why we should use it—to free ourselves up for the new and harder problems. And if you think humanity is running out of problems, think again.

2) “AI Learned Math, So It Can Learn Anything!”

The claim: It’s acing math contests. Winning Olympiad medals. Humans are toast.

Reality: Impressive? Absolutely. A trustworthy, end-to-end reasoner? Not even close.

There’s evidence now that AI might eventually create new math or even publish research papers.

Right now, AI is useful as a step-by-step helper, producing outlines, bounds, or partial proofs you can build on. It can even churn out something that looks like a polished research paper in five minutes. But here’s the kicker: it might take you five days (or more) to check, trust, and actually own the result.

Even if AI one day matches a top mathematician, does that mean mathematicians are obsolete? Really? Of course not. It just means the best humans will tackle bigger, harder, more abstract problems. We’re not running out of problems to solve, folks.

If AI can generate and test hypotheses, fantastic. That frees me to think at higher levels of abstraction, connect fields, and attack the kinds of challenges no one could touch before. That’s exactly what happened with calculators: they didn’t kill math, they pushed humans to new frontiers.

Now, there’s a reason AI is getting pretty good at math:

A huge part of mathematics (and of science and other fields in general) relies on analogical intelligence: spotting patterns, reusing proof techniques, remixing methods. That’s exactly what today’s models are built for.

Another big piece is knowledge depth. The more you’ve seen, the more connections you can make. That’s why senior professors often outpace junior students—not always because they’re smarter, but because they’ve simply seen more. AI, trained on massive corpora, naturally shines at this too.

But here’s the catch: can AI deliver true breakthroughs? That’s far less clear. If you gave an AI in 1900 all the knowledge of that era, I doubt it would have leapt to general relativity.

Einstein didn’t just remix existing ideas—he reframed the universe. That kind of leap takes more than pattern-matching; it takes perspective, intuition, and a willingness to break the frame entirely.

3) “Replace All the Humans” Breaks Accountability

Picture RoboCorp: 100% AI workforce. One human “overseer.”

Question: When things go wrong (they will), who’s accountable?

- One person cannot verify thousands of outputs daily.

- Blind trust in AI is… not a risk strategy.

- Many industries require a human decision-maker by law or by common sense.
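To put a rough number on the first bullet, here is a back-of-the-envelope sketch in Python. The inputs (2,000 AI outputs a day, one minute to review each, an 8-hour shift) are illustrative assumptions, not data from real deployments; swap in your own.

```python
import math

# Back-of-the-envelope: can one human "overseer" check every AI output?
# The example inputs used below are illustrative assumptions, not measurements.


def review_hours_per_day(outputs_per_day: int, minutes_per_review: float) -> float:
    """Hours of human review needed to check every output once."""
    return outputs_per_day * minutes_per_review / 60


def reviewers_needed(outputs_per_day: int, minutes_per_review: float,
                     hours_per_shift: float = 8.0) -> int:
    """Full-time reviewers required to cover the daily load, rounded up."""
    hours = review_hours_per_day(outputs_per_day, minutes_per_review)
    return math.ceil(hours / hours_per_shift)


if __name__ == "__main__":
    # Hypothetical RoboCorp day: 2,000 outputs, 1 minute of review each.
    print(f"{review_hours_per_day(2000, 1):.1f} review hours per day")
    print(reviewers_needed(2000, 1), "full-time reviewers")
```

Under those assumptions the review load comes to about 33 hours a day, more than a single person has, which works out to five full-time reviewers. And one minute per check is generous for anything legal, financial, or medical.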

Reality on the ground:

AI increases the need for human oversight—auditing, escalation, training data curation, exception handling, ethics reviews. Translation: new human jobs appear around the tech.

The distribution of work is not only about the distribution of tasks. It's also about the distribution of responsibility.

Real-world pattern:

Autopilot didn’t fire pilots. Spellcheck didn’t fire editors. Mapping apps didn’t fire logistics managers. Each tool shifted the job to higher-value decisions.

So… Is Your Job Safe? Here’s the Ground Truth

Yes—if you reinvent your job.

Not only are you safe, but honestly—we need you more than ever.

People who can use the machine, challenge the machine, and solve 20x more problems while taking responsibility for the outcome? That’s worth more than a thousand agents.

If you’re a passive worker, though… it’s time to level up. And this is where most people fall behind.

I’ve worked with dozens of companies running AI transformation and training programs. The pattern is always the same:

- Worker mindset → “I used to do A → B → C → D. AI doesn’t fit because it’s not perfect at B.”

- My response → “Then you’ve already lost.”

AI doesn’t fit neatly into the old workflow. You need to rethink the work entirely.

Here’s a caricature that sums up the usual pushback I hear when people resist using AI:

A Simple Playbook to Future-Proof Your Job

1) Make AI your first-draft engine.

Always start with an AI draft for repeatable tasks (emails, briefs, outlines, scripts). Then edit aggressively.

2) Build a “personal SOP library.”

Save your best prompts, style guides, QA checklists, and templates. Iterate weekly.

3) Chain tools, not just prompts.

Use AI to write code that pulls your data, then to visualize results and draft insights. Keep humans on the decision gates.

4) Institute “human gates” where it counts.

Define where a person must review: legal, financial, medical, safety, brand, ethics.

5) Track lift, not hype.

Measure actual time saved and quality improved. If it’s not moving a KPI, cut it.
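One way to make “track lift” concrete is to compare averages from before and after you introduced an AI step. The sketch below assumes you log minutes spent (including human review) and revision rounds per deliverable; the `Deliverable` record, the sample numbers, and the 10% keep-or-cut threshold are all hypothetical choices, not a standard metric.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Deliverable:
    minutes: float   # total time spent, including human review (hypothetical field)
    revisions: int   # revision rounds before sign-off (hypothetical field)


def pct_change(old: float, new: float) -> float:
    """Percent change from old to new; negative means the number went down."""
    if old == 0:
        raise ValueError("baseline average must be non-zero")
    return (new - old) / old * 100


def lift(before: list[Deliverable], after: list[Deliverable]) -> dict[str, float]:
    """Compare average time and revisions before vs. after adding AI."""
    return {
        "time_change_pct": pct_change(mean(d.minutes for d in before),
                                      mean(d.minutes for d in after)),
        "revision_change_pct": pct_change(mean(d.revisions for d in before),
                                          mean(d.revisions for d in after)),
    }


def keep_tool(result: dict[str, float], min_improvement_pct: float = 10.0) -> bool:
    """Keep the tool only if time or revisions dropped by at least the threshold."""
    return (result["time_change_pct"] <= -min_improvement_pct
            or result["revision_change_pct"] <= -min_improvement_pct)


if __name__ == "__main__":
    before = [Deliverable(120, 3), Deliverable(90, 2)]   # made-up baseline
    after = [Deliverable(60, 2), Deliverable(45, 1)]     # made-up AI-assisted runs
    result = lift(before, after)
    print(result, "keep" if keep_tool(result) else "cut")
```

With the made-up numbers above, average time drops by 50% and revisions by 40%, so the tool stays. The keep-or-cut rule is deliberately simple (either metric improving by the threshold is enough); you might prefer a stricter rule that also requires neither metric to get worse.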

What This Means for You (Today, Not 10 Years From Now)

In the next 90 days:

- List your top 10 repetitive tasks. Point AI at them.

- Make one “AI-assisted” deliverable per day. Ship it. Get feedback.

- Add one human gate to your workflow where the cost of error is high.

- Create a prompt kit for your role (5–10 reusable prompts).

- Document wins. Time saved. Fewer revisions. Happier clients.

TL;DR (Tape this above your desk)

- AI takes tasks, not your job.

- Use it as a power tool. Draft, analyze, repurpose—then you decide.

- Keep humans on the hook. Responsibility doesn’t automate.

We’re about to launch the Pathfinder AI Bootcamp—an 8-week live expedition to master AI in your career using our proprietary LUMEN Framework™.

If this calmed your nerves (or lit a fire), hit reply and tell me one task you’re handing off to AI this week. I’ll send you an exclusive 60% discount for the next bootcamp.

Stay sharp,

— Charafeddine (CM)