The lie about “AI Productivity”

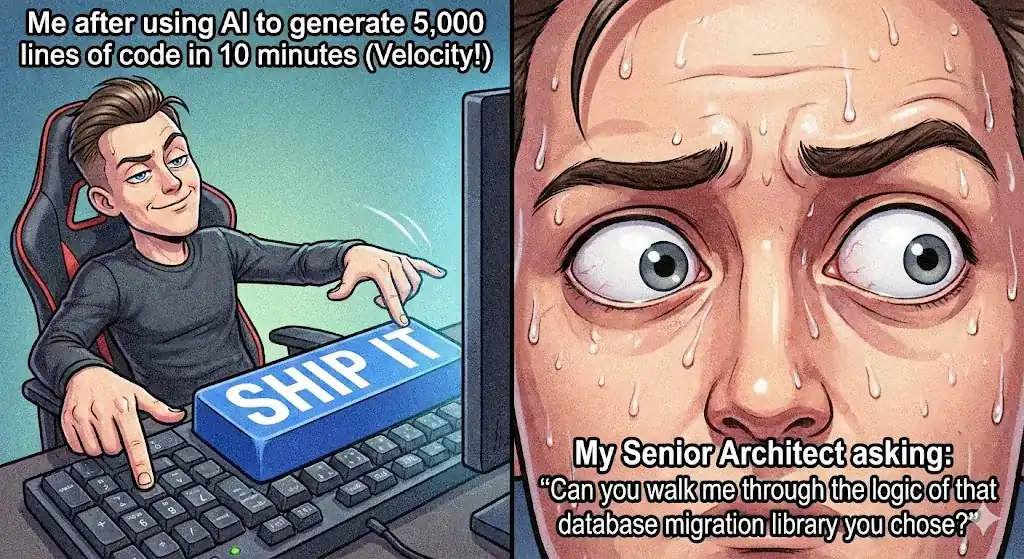

A few months ago, I sat in on a team review call that felt… normal.

A junior dev had shipped a feature “with AI help.”

The demo worked. The code looked clean. Comments were neat. Variable names were almost poetic.

A senior engineer skimmed it, nodded, and said:

“Nice. Quick question — why did you choose this library?”

Silence.

Then the junior (very confidently) replied:

“Uh… Claude suggested it.”

The senior leaned back and hit them with the kind of calm that’s actually a warning:

“Okay. What are the tradeoffs? What’s the failure mode? What would you use instead if this breaks?”

More silence.

And that’s when it clicked for me:

AI didn’t make this person competent.

It made their incompetence look professional.

Welcome to the new era.

Big Idea: AI Made Incompetence More Expensive

The mainstream narrative is:

- “AI is the great equalizer.”

- “AI gives everyone leverage.”

- “AI turns juniors into mid-levels.”

I get why people love this. It’s optimistic. It sells software. It makes for great demos.

But in the real world — inside teams, inside companies, inside products that must not break — AI is not an equalizer.

AI is an amplifier.

And amplification doesn’t magically fix direction.

It just makes you move faster… in whatever direction you were already headed.

The Math Nobody Wants to Admit

Think of AI like a multiplier:

- Skill (10) × AI (10) = 100 output

- Incompetence (-5) × AI (10) = -50 output

Same tool. Same “leverage.”

Completely different outcome.

Because the tool doesn’t supply judgment.

It supplies volume.

And volume in the wrong direction is just a faster crash.

The problem is that in the real world, “Skill” and “Incompetence” are not evenly distributed. You usually have something like 90% “Incompetence” (or more) and 10% “Skill,” which makes “AI amplification” very bad at scale.
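To make that arithmetic concrete, here is a minimal Python sketch of the toy model. The scores (10, -5) and the AI multiplier (10) come from the list above, and the 90/10 split is the rough guess from the previous paragraph, not measured data. The function names `output` and `team_average` are made up for illustration.

```python
def output(skill: int, ai_multiplier: int = 10) -> int:
    """Toy model from the text: output = skill score x AI multiplier."""
    return skill * ai_multiplier


def team_average(skilled_pct: int, skilled_score: int = 10,
                 unskilled_score: int = -5, ai_multiplier: int = 10) -> float:
    """Average per-person output when skilled_pct percent of the team is skilled.

    The default scores are the article's illustrative numbers, not real data.
    """
    unskilled_pct = 100 - skilled_pct
    total = (skilled_pct * output(skilled_score, ai_multiplier)
             + unskilled_pct * output(unskilled_score, ai_multiplier))
    return total / 100


print(output(10))        # 100
print(output(-5))        # -50
print(team_average(10))  # -35.0
```

Under these assumed numbers, a team that is 10% skilled averages -35 per person: the few people producing +100 are outweighed by the many producing -50.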

The Old World vs. The AI World

Before AI: Incompetence was low-velocity

A bad junior coder wrote broken code slowly.

A weak marketer wrote messy copy slowly.

The damage was contained because the output volume was low.

Also… it was easy to spot.

Typos. Bad structure. Code that doesn’t compile. Logic holes you can see from space.

Now: Incompetence is high-velocity

AI enables what I call High-Velocity Incompetence.

Unskilled people can produce:

- 300 files of code

- 20 marketing emails

- a strategy deck

- a product spec

- “research”

…in one afternoon.

And the output looks polished.

Which leads to the real problem.

The Rise of “Plausible Bullshit”

We’ve moved from obvious incompetence to plausible incompetence.

The output sounds smart. Looks clean. Reads well.

But it’s hollow. Or wrong. Or fragile. Or average. Or bland. Or subtly dangerous.

And that creates a brutal hidden cost:

The Review Tax

Because now your best people don’t spend time building.

They spend time auditing very long, “sophisticated” outputs.

And auditing is (very) expensive.

Example 1: The Developer (The Technical Debt Bomb)

Before AI:

Junior writes code that fails the compiler.

Senior sees it immediately. Fix time: 5 minutes.

With AI:

Junior prompts Claude/Gemini. Code compiles. Runs. Looks clean.

But underneath the pretty surface, it may:

- use a deprecated library (works today, breaks tomorrow)

- introduce a silent security vulnerability

- reimplement (poorly) what existing libraries already do

- mishandle edge cases (timeouts, nulls, concurrency)

- violate your architecture patterns

- create weird coupling that makes future changes painful

And the junior can’t explain any of it, because they have no idea what the AI actually did.

They teleported into the middle of a maze.

The cost:

Endless loops of “please bro correct this, I will lose my job” prompts… and/or, three months later, production goes sideways.

Senior dev spends 40 hours untangling a mess buried under “perfect-looking” code.

AI didn’t save time.

It borrowed time from your most expensive people… with interest.

Example 2: The Marketer (The Brand Erosion)

Before AI:

Bad writer produces a choppy blog post.

Editor rejects it instantly.

With AI:

Bad writer generates a 2,000-word article.

- Grammar: perfect

- Structure: logical

- Tone: “professional”

But the insight is zero.

It’s Vanilla Soup: it says everything and means nothing.

The cost:

You publish it because it “looks pro.”

Your audience reads it and thinks:

“This brand has no real point of view.”

They don’t complain. They don’t comment. They just… leave.

You didn’t lose time.

You lost trust, and trust is very expensive to win back.

Why AI Made Spotting Problems 100× More Expensive

Simple question:

What’s more expensive to review?

- a 3-file codebase with issues you can see quickly, or

- a 300-file codebase generated by AI + someone who doesn’t know what they’re doing?

Exactly.

AI makes it cheap to produce volume.

But verification doesn’t scale linearly.

It scales like pain.

This is Brandolini’s Law in action:

It takes an order of magnitude more energy to refute bullshit than to produce it.

AI weaponized that law.

- Cost to generate “plausible BS output”: seconds

- Cost to verify and OWN it: hours of senior time

And senior time is the most expensive resource in most companies.

Some might say: “OK, AI isn’t leverage for less skilled people; it’s an amplifier for skilled people. You ‘just’ have to hire the best.”

(By the way, everyone thinks they're in the "highly skilled" category.)

Yeah… right. But:

- Hiring the best is expensive. Very expensive.

- It concedes that AI is not an equalizer: being highly skilled is more valuable than ever. That contradicts the “software engineering is dead” and “learning to code is over” fallacies.

The Real Divide: “Demo Day” vs “Day 2”

If you want to understand why “AI hype” is so disconnected from reality, you need to understand this.

VCs operate on Hope Capital.

They win when something looks magical for 3 minutes:

- slick demo

- instant output

- “look how fast we shipped!”

Operators (you, me, real teams) operate on Trust Capital.

We win on:

- reliability

- maintenance

- retention

- shipping without breaking everything

AI tools are currently optimized for Demo Day.

Businesses die on Day 2:

- bugs

- hallucinations

- support tickets

- exponential costs

- brand erosion

- unmaintainable systems

People who get VC money can afford to dream and fantasize about AI. And they're very loud on social media. If your concern is extracting real value from AI to pay the bills—you can't afford that luxury.

This is why the hype feels so… detached from reality.

It’s easy to fantasize when you don’t pay the cleanup bill.

Just like Web3 and NFTs: the story was beautiful.

Reality was stubborn.

The Vector Theory of Competence

Let’s make this physically undeniable:

Competence is a vector.

A vector has two things:

- Magnitude (speed / volume)

- Direction (strategy / accuracy)

AI gives you magnitude.

Humans provide direction.

So if a person has bad direction — poor judgment, weak taste, shallow understanding — AI doesn’t fix them.

It turns them into a rocket aimed at the wrong target.

Speed in the wrong direction is just a faster crash.
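The vector picture can be sketched in a few lines of Python. This is a toy model, not a measurement: the function name `progress_toward_goal`, the angle parameter, and the 10x multiplier are illustrative assumptions. Only the idea that AI scales magnitude while the human sets direction comes from the text.

```python
import math


def progress_toward_goal(magnitude: float, angle_off_target_deg: float) -> float:
    """Component of the effort vector that points at the goal.

    0 degrees = perfectly aimed, 90 = sideways, 180 = directly away.
    """
    return magnitude * math.cos(math.radians(angle_off_target_deg))


AI_MULTIPLIER = 10  # hypothetical: AI scales magnitude only, never the angle

for label, angle in [("good direction", 0), ("bad direction", 180)]:
    before = progress_toward_goal(1, angle)
    after = progress_toward_goal(1 * AI_MULTIPLIER, angle)
    print(f"{label}: {before:+.1f} -> {after:+.1f}")
```

In this sketch the well-aimed effort goes from +1.0 to +10.0 and the badly aimed one from -1.0 to -10.0. The multiplier is identical in both cases; only the sign of the result differs, and that sign is set entirely by the angle.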

The New Definition of Competence

In the old world, competence meant:

“Can you do it?”

In the AI world, competence means:

“Can you evaluate it?”

Because creation is cheap now.

Verification is the bottleneck.

The “Lost Generation” Risk (Yes, It’s Real)

I'm genuinely worried about the juniors on my teams and the students in my classes. They're facing a real challenge.

If juniors use AI to bypass the struggle, they never build the mental models required to become seniors.

You don’t learn to write by generating text.

You don’t learn system design by auto-completing code.

Five years from now, we risk a workforce of:

“Senior prompters”

who are functionally illiterate in the deep mechanics of their craft.

And that’s a terrifying operational bottleneck.

The Solution: Taste + Ownership (Not Prompt Engineering)

I’m going to say something unpopular:

Prompt engineering isn’t the real skill.

Auditing is.

Taste isn’t some artsy luxury.

Taste is operational survival.

Because to get value from AI, you must be better than the AI.

- The best AI artists already understand lighting and composition.

- The best AI coders already understand architecture and failure modes.

- The best AI marketers already have a point of view and can smell “vanilla soup” instantly.

AI doesn’t replace expertise.

It rewards it.

If you want a place in the future workforce, you must:

- know how to use AI efficiently

- know how to infuse your taste / your unique perspective / your energy

- be able to challenge AI (because you used it to learn, not to fake)

- be able to collaborate with AI

- own the final result: sign it with your name and defend it

The AI OS Rule: Ownership > Output

You can’t buy a tool to fix a broken process.

You can’t buy a tool to fix a lack of skill.

What you need is a system — an AI OS — that turns AI from “output machine” into “quality machine.”

Here’s one protocol every company should implement immediately:

The Interrogation Protocol (aka Proof of Understanding)

If someone submits AI-assisted work, ask 3 questions.

If they fail even one, it doesn’t ship.

- Logic check: “Walk me through step 3. Why this library/adjective/argument, and what were the alternatives?”

- Failure mode: “Where is this most likely to break? What edge case did the prompt miss?”

- Attribution: “Which parts are 100% you, and which parts are machine?”

If the answer is:

“I don’t know, it just worked.”

Verdict: Rejected.

Not because we hate AI.

Because we love reality.
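If you want to wire the protocol into a review checklist, the rule “three questions, fail one, it doesn’t ship” is simple enough to sketch in Python. Everything named here (`Answer`, `QUESTIONS`, `verdict`) is hypothetical and only illustrates the gate. Whether an answer is satisfactory is still a human reviewer’s call; the code just records it.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    question: str       # which of the three checks this answers
    satisfactory: bool  # the reviewer's judgment; a human decides this


# The three checks from the protocol above.
QUESTIONS = ("logic check", "failure mode", "attribution")


def verdict(answers: list[Answer]) -> str:
    """Return "Ships" only if all three checks were asked and passed."""
    passed = {a.question for a in answers if a.satisfactory}
    return "Ships" if all(q in passed for q in QUESTIONS) else "Rejected"


review = [
    Answer("logic check", True),
    Answer("failure mode", False),  # "I don't know, it just worked."
    Answer("attribution", True),
]
print(verdict(review))  # Rejected
```

A missing answer counts the same as a failed one, so skipping a question can’t sneak work through the gate.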

Hiring Just Changed Too

Stop testing candidates for speed.

Speed is cheap now.

Test for:

- Taste

- Debugging ability

- Hallucination detection

- Judgment under ambiguity

One of the best interviews you can run:

Give them a polished AI-generated output with subtle flaws.

Ask them to find the hallucination.

If they can’t spot plausible BS, they can’t safely use the tool.

The Closing Thought I Want You To Steal

In the AI era, you are not paid for your “hands”.

You are paid for your “eyes”.

If you cannot see the flaw…

you are the flaw.

If you’re leading a team right now, here are your key takeaways:

- AI amplifies skill AND incompetence

- “Plausible bullshit” is the new default failure mode

- Verification is now the expensive part (hello, Review Tax)

- Your seniors will pay for junior shortcuts unless you build process

- The real skill is auditing + taste + ownership

- Implement an Interrogation Protocol or prepare for quiet chaos

And if you want the meta-take from me — as an AI agency owner, scientist, professor, and creator:

AI isn’t the product.

Your operating system around AI is the product.

Tools don’t save sloppy teams.

Systems do.

Have a great weekend.

—Charafeddine (CM)