The Friendly Developer’s Guide to CrewAI for Support Bots & Workflow Automation

Building AI-driven customer support bots and business process automation agents can feel like assembling an elite team of digital coworkers. In this guide, we’ll explore CrewAI – a popular open-source framework for orchestrating multiple AI agents – and how it can help you create collaborative support bots and automated workflows. We’ll walk through key concepts in a conversational tone (with a dash of humor), show code snippets in Python, offer tips for production deployment, and even compare CrewAI with OpenAI’s new Agent SDK based on community insights. Let’s dive in!

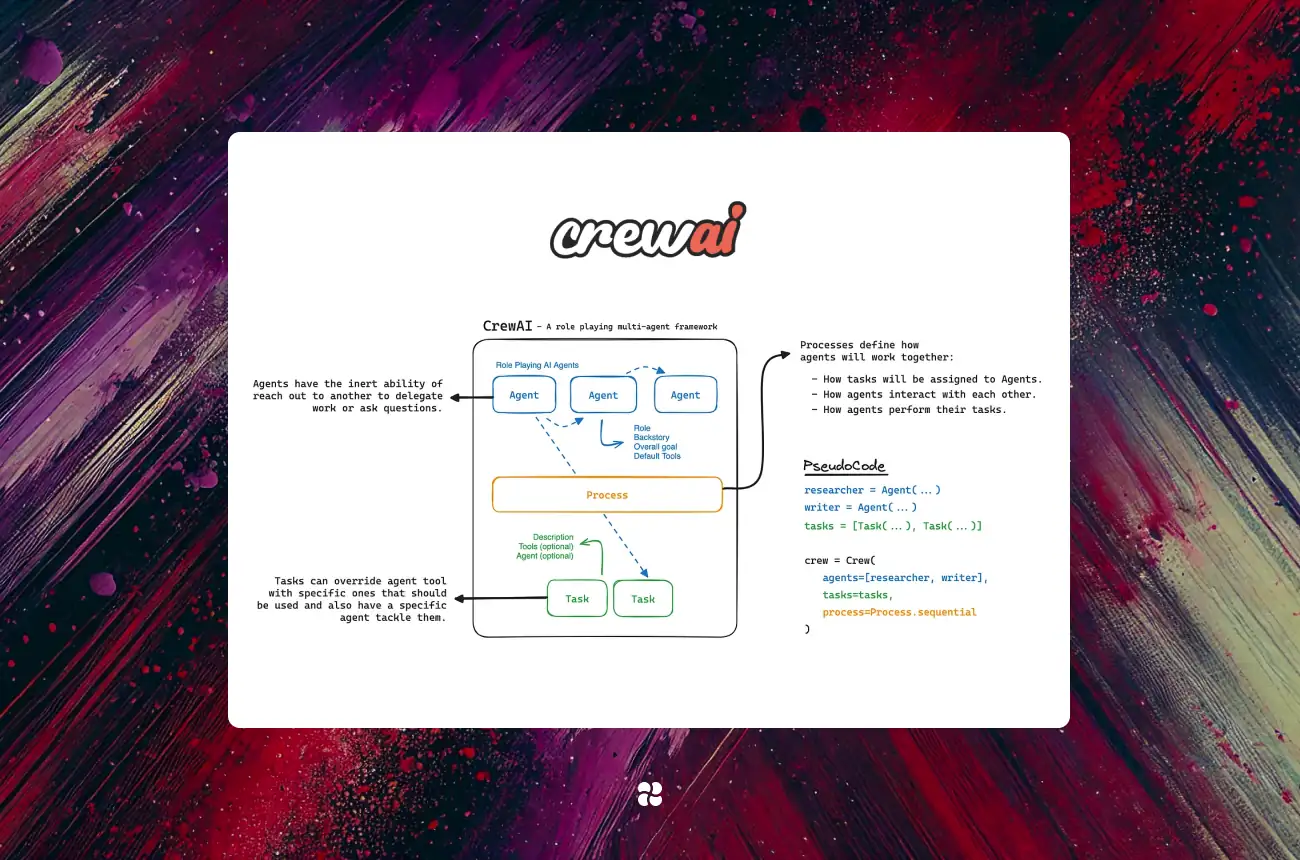

What is CrewAI?

CrewAI is a framework designed to let multiple AI agents work together as a team. Think of a “crew” of AI agents, each with specific roles and goals, collaborating to handle complex tasks autonomously. For example, in a customer support scenario, one agent could handle answering user questions while another fetches data from the knowledge base. CrewAI provides the structure (called Crews and Flows) to coordinate these agents seamlessly, so they can delegate tasks and cooperate much like humans do in a team. In essence, CrewAI empowers developers to build AI solutions that balance autonomy and control – agents have the freedom to make decisions, but you still guide the overall workflow.

Key idea: The term “crew” in CrewAI literally means a crew of agents working together. Each agent can be given a role (like “Support Representative” or “Data Analyst”), a goal (their mission), and even a backstory or persona to shape their behavior. CrewAI’s job is to orchestrate these agents through defined tasks and workflows, ensuring they collaborate effectively to achieve a bigger objective.

CrewAI’s Two Core Concepts: Crews & Flows

CrewAI provides two main abstractions to structure your AI system:

- Crews: A Crew is a set of AI agents with autonomy and the ability to interact with each other. You can imagine a Crew as a small team or department. Each agent in a crew has a role and a goal, and possibly tools or knowledge they can use. CrewAI’s role-playing design makes collaboration feel intuitive: it’s like assigning duties to team members based on their job titles. Agents can also delegate tasks to each other when appropriate (this is configurable per agent via allow_delegation), just as a human team member might ask a colleague for help. For example, an “Invoice Processor” agent might delegate a calculation task to a “Finance Checker” agent if needed. The crew provides autonomy: once you define the roles and goals, the agents can decide how to achieve them together.

- Flows: A Flow is an event-driven workflow that gives you fine-grained control over the sequence of tasks. If a Crew is about agent autonomy, a Flow is about structured orchestration. Flows let you define steps and decision logic: you can run tasks in sequence or in parallel, branch based on conditions, handle errors, etc., much like a traditional workflow engine. In CrewAI, flows and crews are complementary – you might use a Flow to coordinate when different Crews run or to enforce business rules, while within each step a Crew of agents can operate autonomously. This combination means you get the best of both worlds: flexible AI decision-making and predictable control flow.

In simple terms: If building a support bot, you might have a Crew of agents (like “SupportAgent” and “KnowledgeAgent”) handling the conversation, and use a Flow to ensure the conversation follows a certain structure (first greet the user, then answer, then follow-up). The Crew handles the how (agents figuring out the answer), while the Flow ensures the when/if (e.g., if the answer confidence is low, maybe escalate to a human). CrewAI was designed to make this orchestration natural, especially for multi-step business processes where some steps benefit from AI autonomy and others need strict logic.
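The split between the "how" and the "when/if" can be sketched in plain Python. This is not CrewAI's Flow API: the crew is stubbed out as an ordinary function, and `run_support_crew`, the `confidence` field, and the 0.6 threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CrewAnswer:
    text: str
    confidence: float  # hypothetical score in [0, 1]; not something CrewAI returns by itself


def support_flow(
    question: str,
    run_support_crew: Callable[[str], CrewAnswer],
    threshold: float = 0.6,
) -> List[str]:
    """Greet, answer, then follow up or escalate depending on confidence."""
    transcript = ["Hi! Thanks for reaching out."]  # step 1: greet
    answer = run_support_crew(question)            # step 2: the crew decides *how* to answer
    if answer.confidence < threshold:              # step 3: the flow decides *when/if* to escalate
        transcript.append("Let me connect you with a human colleague.")
    else:
        transcript.append(answer.text)
        transcript.append("Is there anything else I can help with?")
    return transcript


def fake_crew(question: str) -> CrewAnswer:
    """Stand-in for a real crew so the sketch runs without an API key."""
    if "password" in question.lower():
        return CrewAnswer("Use the 'Forgot password' link on the login page.", 0.9)
    return CrewAnswer("I'm not sure.", 0.2)


for line in support_flow("How do I reset my password?", fake_crew):
    print(line)
```

In a real project the stub would be replaced by a call into a Crew, and the branching would probably live in a CrewAI Flow rather than in a hand-written function.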

Setting Up CrewAI

Getting started with CrewAI is straightforward. It’s a Python framework (Python 3.10–3.12 supported) available via pip. You can install it in your environment with a single command:

pip install crewai

This installs the core CrewAI package. For extra functionality like certain tools, you can install optional extras: e.g. pip install 'crewai[tools]' to include additional agent tools (web search, etc.). After installing, you’re ready to create your first multi-agent project.

CrewAI provides a CLI to scaffold a project. For example, you can run crewai create crew my_project to generate a sample project structure with config files for agents and tasks. This will set up some YAML config files (e.g. agents.yaml and tasks.yaml) and Python modules for you to customize. The YAML files let you define agent roles/goals and task details declaratively, but you can also define them purely in Python code (we’ll show an example soon).

Before running any agents, make sure to configure your AI model credentials. By default, CrewAI will use the OpenAI API for language model calls (you can change this to other providers or local models later). So you’ll need an OpenAI API key set in the environment, e.g.:

export OPENAI_API_KEY="sk-..." # your OpenAI API key

CrewAI’s examples also often use a search tool for agents (Serper.dev), so if your tasks involve web search, set the Serper API key as well:

export SERPER_API_KEY="your-serper-key"

With installation and keys done, you can run your agent crew. If you used the project scaffold, navigate into your project directory and simply execute:

crewai run

This will run the workflow as defined (alternatively, run python src/my_project/main.py, which typically invokes your Crew). You should start seeing your agents spring into action in the console, collaborating to produce an output (for example, a final answer or a report file). 🎉
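Before launching a crew, a small preflight check can confirm that the keys mentioned above are actually set. The sketch below uses only the standard library; `missing_keys` is a hypothetical helper, not part of CrewAI.

```python
import os
from typing import Iterable, List, Mapping


def missing_keys(required: Iterable[str], env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the names of required variables that are unset or blank."""
    return [name for name in required if not env.get(name, "").strip()]


missing = missing_keys(["OPENAI_API_KEY", "SERPER_API_KEY"])
if missing:
    print("Missing environment variables: " + ", ".join(missing))
```

Failing early with a clear message tends to be easier to debug than an authentication error raised halfway through an agent run.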

Troubleshooting tip: If you run into any dependency issues (e.g. a tiktoken error when installing), CrewAI’s docs suggest installing missing pieces via extras (e.g. pip install 'crewai[embeddings]' if you need embedding support). Ensure you have a Rust compiler if needed for some packages, and upgrade pip if you encounter wheel build problems.

Now that we’ve set up, let’s build something fun and useful!

Building a Customer Support Bot with CrewAI

Let’s create a simple customer support bot using CrewAI. Imagine we want to automate answering customer questions. We’ll assemble a crew of two agents for this task:

- SupportAssistant – the friendly agent that interacts with the customer and formulates answers.

- KnowledgeGuru – a behind-the-scenes agent that fetches relevant information from a knowledge base or documentation.

We’ll have two tasks in our workflow:

- Fetch Information: The KnowledgeGuru agent will search the knowledge base for the answer.

- Answer User: The SupportAssistant agent will compose a helpful answer using that info.

This is a classic workflow where one agent gathers data and another uses it to respond. We’ll define these agents and tasks in code and then run the crew. Here’s how it looks in Python:

from crewai import Agent, Task, Crew, Process

# Define our agents with roles and goals
support_assistant = Agent(
    role="Customer Support Agent",
    goal="Provide accurate and friendly answers to customer queries",
    backstory="You are a virtual support rep who always puts the customer first.",
)

knowledge_guru = Agent(
    role="Knowledge Base Expert",
    goal="Find and supply relevant information from company documentation",
    backstory="You have encyclopedic knowledge of the product and internal docs.",
)

# Define tasks and assign them to agents.
# The {user_question} placeholder is filled in from the inputs passed to kickoff().
fetch_info = Task(
    description="Find information related to the user's question: {user_question}",
    expected_output="Relevant facts or steps from the knowledge base",
    agent=knowledge_guru,
)

answer_user = Task(
    description="Give the user a helpful answer to '{user_question}' using the information found.",
    expected_output="A well-formed answer addressing the user's query",
    agent=support_assistant,
)

# Create a crew of the two agents with a sequential process (fetch, then answer)
support_crew = Crew(
    agents=[support_assistant, knowledge_guru],
    tasks=[fetch_info, answer_user],
    process=Process.sequential,
)

# Run the crew with an example user question
result = support_crew.kickoff(inputs={"user_question": "How do I reset my password?"})

# The final answer drafted by the SupportAssistant. Recent CrewAI versions return a
# CrewOutput object whose .raw attribute holds the final text.
print(result.raw)

Let’s break down what’s happening in this code:

- We instantiate two Agent objects. Each agent is given a role and a goal (plus an optional backstory to further guide its persona); this is how CrewAI imbues agents with distinct identities and objectives. Our SupportAssistant knows it should be friendly and helpful, while the KnowledgeGuru knows it must dig up info.

- We then create two Task objects. A Task in CrewAI describes a unit of work (with a description of what to do and what output is expected) and assigns it to an agent. In our case, the fetch_info task is assigned to the knowledge guru agent, and the answer_user task is assigned to the support assistant. By specifying process=Process.sequential in the crew, we ensure the tasks run one after the other in order (first fetch info, then answer).

- We bundle the agents and tasks into a Crew. The Crew is our orchestrator: it knows which agents and tasks are involved and how to run them (sequentially, in this example). If we wanted a different execution style, we could mark independent tasks with async_execution=True so they run concurrently, or use a hierarchical process (Process.hierarchical, where a manager agent plans the steps). For a simple Q&A flow, sequential is fine.

- Finally, we call kickoff on the crew, providing the user’s question as input. CrewAI interpolates user_question into the {user_question} placeholders in the task descriptions. The KnowledgeGuru agent receives the question and fetches info; in a sequential process its output is passed as context to the next task, where the SupportAssistant agent produces the final answer. result.raw then contains the SupportAssistant’s answer as text.
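How `inputs` reach a task is easiest to see with ordinary string formatting. The sketch below is an illustration only: it mimics placeholder filling with Python's `str.format`, and CrewAI's own interpolation may differ in its details. `fill_placeholders` and the example description are hypothetical.

```python
from typing import Dict


def fill_placeholders(template: str, inputs: Dict[str, str]) -> str:
    """Replace {name} placeholders in a task description with kickoff inputs."""
    return template.format(**inputs)


description = "Find information related to the user's question: {user_question}"
inputs = {"user_question": "How do I reset my password?"}

filled = fill_placeholders(description, inputs)
print(filled)
# prints: Find information related to the user's question: How do I reset my password?
```

With `str.format`, a placeholder that has no matching input raises a `KeyError`, so it helps to keep the placeholder names in task descriptions and the keys passed at kickoff in sync.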

If everything is set up (and your OpenAI API key is valid), this should result in a printed answer to the user’s question. In a real app, you’d send that back to the user through your chat interface or API.

Note: For brevity, the example above does not show integration with actual knowledge base data – our KnowledgeGuru agent could be enhanced with a tool to query documentation (CrewAI supports various tools, e.g. a web search tool). In CrewAI, you can attach tools to agents easily. For instance, if we had an API or search function, we could include something like tools=[SerperDevTool()] when creating an agent to give it internet search capability. CrewAI’s flexibility with tools means you can plug in custom functions or connectors for your business data.
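As a rough idea of what a "custom function for your business data" might look like, here is a tiny keyword lookup over an in-memory FAQ. Everything in it (the `FAQ` contents, `tokenize`, `search_faq`) is made up for illustration, and registering such a function as a CrewAI tool is deliberately left out because the wrapper API is best taken from the current docs.

```python
import re
from typing import Dict, Optional, Set

# Hypothetical in-memory knowledge base; a real one might be a database or search index.
FAQ: Dict[str, str] = {
    "reset password": "Open Settings > Security and choose 'Reset password'.",
    "update billing": "Go to Account > Billing to change your payment method.",
    "delete account": "Contact support to request account deletion.",
}


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search_faq(question: str, faq: Dict[str, str] = FAQ) -> Optional[str]:
    """Return the entry whose key shares the most words with the question, if any."""
    words = tokenize(question)
    best_key, best_score = None, 0
    for key in faq:
        score = len(words & tokenize(key))
        if score > best_score:
            best_key, best_score = key, score
    return faq[best_key] if best_key is not None else None


print(search_faq("How do I reset my password?"))
```

Word overlap is a crude matching strategy chosen to keep the sketch short; a production knowledge agent would more likely sit on top of a search index or embeddings.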

Our support bot example is simple but demonstrates the core idea: multiple specialized agents can cooperate to handle a support request. You can extend this pattern with more agents or tasks. For example, add a “SentimentWatcher” agent that checks the user’s tone and alerts a human operator if the user is unhappy, or an “UpsellAgent” that looks for opportunities to recommend relevant products after resolving an issue (just kidding… unless? 🤖💼).

Automating Business Processes with CrewAI

Beyond support bots, CrewAI shines in orchestrating complex business processes. You can think of each agent as a department specialist and the flow as the standard operating procedure. Let’s consider a couple of scenarios:

- Order Fulfillment Workflow: Imagine processing an e-commerce order. You could have an OrderBot agent that takes an order, an InventoryAgent that checks stock, a PaymentAgent that handles the charge, and a ShippingAgent that books the shipment. Using a CrewAI Flow, you can define steps: if stock is available, then charge payment; if payment succeeds, then schedule shipping; if any step fails, notify a human. Each agent autonomously handles its piece (e.g., InventoryAgent might query a database via a tool), and the flow ensures it all happens in the right order. CrewAI’s event-driven flows allow conditional branching: for example, if inventory is low, the flow could trigger an alternate path to restock or cancel the order.

- Employee Onboarding Process: Consider onboarding a new employee, which involves multiple departments. A WelcomeAgent could greet the new hire and collect info, an ITAgent could create accounts and provision hardware, an HRAgent could handle paperwork. These agents can be orchestrated so that once the WelcomeAgent finishes gathering data, the ITAgent and HRAgent work in parallel on their tasks. You could even have a ManagerAgent overseeing the process (using CrewAI’s hierarchical process mode, Process.hierarchical, in which a manager agent coordinates the tasks). This manager agent would plan tasks, delegate to IT and HR agents, and verify completion, much like a human manager would coordinate different teams.

- Financial Report Generation: This resembles one of CrewAI’s own examples. Suppose you need to generate a detailed quarterly report. You might set up a DataGatherer agent to collect relevant financial metrics, an AnalystAgent to interpret the data, and a ReportWriter agent to compose the report. The flow might be: DataGatherer and Analyst work in parallel (or sequentially, depending on dependencies), then ReportWriter waits until data is ready to draft the report. CrewAI can even output the final report to a file as part of a task (as shown in CrewAI’s example, where they specify an output_file for a task to save results).

These examples illustrate how CrewAI’s model of agents + tasks + flows can map onto real business workflows. Developers can easily extend these patterns: just add agents for new responsibilities or create alternative flows for edge cases. The key benefit is modularity – each agent is like a microservice (with a personality!) that you can plug into different workflows.
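The order fulfillment rules from the first scenario ("if stock is available, then charge; if payment succeeds, then ship; if anything fails, notify a human") can be sketched as plain control flow. This is not CrewAI's Flow API: each step is an injected callable standing in for an agent, and all names (`fulfil_order`, `check_stock`, and so on) are hypothetical.

```python
from typing import Callable


def fulfil_order(
    order_id: str,
    check_stock: Callable[[str], bool],
    charge_payment: Callable[[str], bool],
    book_shipping: Callable[[str], str],
    notify_human: Callable[[str, str], None],
) -> str:
    """Run the order steps in sequence; hand off to a human on the first failure."""
    if not check_stock(order_id):
        notify_human(order_id, "out of stock")
        return "needs_review"
    if not charge_payment(order_id):
        notify_human(order_id, "payment failed")
        return "needs_review"
    try:
        tracking = book_shipping(order_id)
    except Exception as exc:  # any shipping error also goes to a human
        notify_human(order_id, f"shipping failed: {exc}")
        return "needs_review"
    return f"shipped:{tracking}"


# Example run with trivial stand-ins for the agents
alerts = []
status = fulfil_order(
    "A1",
    check_stock=lambda order: True,
    charge_payment=lambda order: True,
    book_shipping=lambda order: "TRK1",
    notify_human=lambda order, reason: alerts.append((order, reason)),
)
print(status)
```

Keeping the business rules in ordinary code like this, and letting agents handle only the individual steps, is one way to get the predictable ordering the scenario calls for.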

Running & Deploying CrewAI in Production

Once you’ve built your CrewAI-powered bot or workflow, how do you deploy it? The good news is that CrewAI is just Python code, so you have lots of flexibility in production:

- As a Script or Service: You can run CrewAI crews as standalone scripts (as we did with crewai run or via a main function). For a production service (say, a chatbot API), you might wrap the crew execution in a web server. For example, use FastAPI or Flask to expose an endpoint that accepts a support question and returns the CrewAI-generated answer. Inside the endpoint handler, you’d call something like answer = support_crew.kickoff(inputs={...}) and return the result. CrewAI’s lightweight nature (not tied to heavy web frameworks) means you can integrate it anywhere in your Python backend.

- Containerization: It’s often convenient to containerize your CrewAI application. Because it’s Python-based, you can use a standard Docker Python image, install crewai and your dependencies, and run your service. Ensure you pass in necessary environment variables (like your API keys for LLMs or tools) into the container. Also, manage your API keys carefully: in production, use a secure way to inject them (Docker secrets, cloud key management, etc.) rather than hardcoding.

- Scaling and Concurrency: If you expect high load (e.g. many simultaneous support queries), you can run multiple instances of your CrewAI service or utilize asynchronous execution. CrewAI supports asynchronous task execution: for instance, you could mark tasks that don’t depend on each other with async_execution=True, or simply launch multiple crews in separate threads/processes to handle separate requests. Just be mindful of your rate limits on the LLM API side; you might need to add throttling or queueing if using OpenAI’s API heavily.

- Monitoring and Logging: In production, you’ll want insight into what your agents are doing. CrewAI outputs logs to console by default (especially with verbose=True on agents or crew), which you should capture. You can also utilize CrewAI’s support for event listeners and state to monitor progress. For example, CrewAI flows maintain a state object that can store intermediate data and outcomes of tasks. You can inspect that state after a run to see whatever your steps stored there, such as confidence scores or recommendations made by agents. If something goes wrong, CrewAI will raise Python exceptions, so ensure you handle those in your production code (perhaps sending an alert or falling back to a human operator if an agent fails).

- Using Different AI Models: By default, CrewAI will call OpenAI’s GPT models via the API (which is why we needed the OpenAI API key). In production, you might prefer a different model (for cost or privacy reasons). CrewAI is model-agnostic: it can integrate with any LLM that follows the same interface. The docs mention you can use local models (like via Ollama for local inference) by configuring the connection. You could also use Azure OpenAI or other providers by setting the appropriate environment variables or configuration in your agents. This flexibility lets you deploy CrewAI even in environments where internet access is restricted, by hosting a local model.

- CrewAI Cloud (Optional): The team behind CrewAI offers a cloud platform as well (with a free trial). This is more of a managed solution where you could deploy your agents on their infrastructure. If you’re an enterprise AI VP or dev who doesn’t want to manage infrastructure, this could be an option – but since CrewAI open source is easy to run, many will just self-host. Still, it’s good to know there’s a cloud service and community forum if you need additional support or features (the website touts that CrewAI is trusted by industry leaders, meaning it’s gaining traction).
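The service-wrapper, throttling, and error-handling points in the list above can be combined in one small handler. The sketch below uses only the standard library and treats the crew as an injected callable, so it runs without CrewAI or an API key. `make_handler`, `FALLBACK`, and the limit of 4 are assumptions; in a real service `run_crew` might be something like `lambda inputs: support_crew.kickoff(inputs=inputs).raw`.

```python
import logging
import threading
from typing import Callable, Dict

logger = logging.getLogger("support_service")

FALLBACK = "Sorry, something went wrong. A human agent will follow up shortly."


def make_handler(
    run_crew: Callable[[Dict[str, str]], str],
    max_concurrent: int = 4,
) -> Callable[[str], str]:
    """Wrap a crew call with a concurrency cap and a human-fallback message."""
    slots = threading.BoundedSemaphore(max_concurrent)

    def handle(question: str) -> str:
        with slots:  # blocks while max_concurrent calls are already in flight
            try:
                return run_crew({"user_question": question})
            except Exception:
                # Log the full traceback, then degrade gracefully for the user.
                logger.exception("Crew run failed for question: %r", question)
                return FALLBACK

    return handle


# Example with a stand-in crew function
handler = make_handler(lambda inputs: "Answer to: " + inputs["user_question"])
print(handler("How do I reset my password?"))
```

The same `handle` function could be called from a FastAPI or Flask endpoint; the semaphore only limits concurrency within one process, so rate limits shared across several instances would need a queue or an external limiter.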

One thing to keep in mind: CrewAI is evolving. Always refer to the official docs for up-to-date deployment and performance tips. The community is quite active (over 100k developers, according to CrewAI’s GitHub), so you’re not alone – if you hit strange issues in production, chances are someone on the forums or GitHub discussions can help.

Now that we’ve covered CrewAI in depth, let’s address the elephant in the room: how does CrewAI compare to the OpenAI Agent SDK (and which should you choose for your project)?

CrewAI vs OpenAI Agent SDK: Community Insights

It’s 2025, and the AI agent frameworks landscape is bustling. OpenAI recently introduced its own Agents SDK (referred to as the “Agent SDK” throughout this guide) to help developers build agentic applications. If you’re wondering how CrewAI stacks up against OpenAI’s Agent SDK, you’re in good company: developers have been comparing notes on Reddit and blogs. Let’s break down the key differences, strengths, and weaknesses, incorporating what the community is saying:

1. Learning Curve and Ease of Use:

CrewAI is often praised for its intuitive role-based approach. Developers like how you can think in terms of a “crew” of agents with roles/goals; it “naturally fits team-based workflows”. The documentation is considered well-structured and beginner-friendly, with plenty of examples. However, some note that you do need to grasp the concepts of tools and roles to use it effectively (it’s not quite plug-and-play, though community comparisons tend to rank it second easiest among its peers).

OpenAI’s Agent SDK, on the other hand, is described as lightweight with minimal abstractions – essentially a lean toolkit. It has a very low learning curve for basic use (the “hello world” is just a few lines to create an agent and run it). Community consensus is that Agent SDK feels “quick to learn” and is quite straightforward for those already familiar with OpenAI’s API. One could say OpenAI’s SDK is simple by design, whereas CrewAI is simple by metaphor (leveraging the crew/team mental model).

2. Multi-Agent Collaboration:

If your goal is to have multiple agents interacting, CrewAI was built for this from the ground up. It supports complex multi-agent setups out-of-the-box, with mechanisms for inter-agent communication, delegation, and coordinated task management. As one blog noted, CrewAI “uses an intuitive ‘crew’ metaphor, making agent communication natural” and “orchestration is role-based, with task management for parallel execution”. This makes CrewAI excellent for modeling scenarios with many specialized agents (like our support team or business process examples).

The OpenAI Agent SDK does support multiple agents, but in a more manual way. It provides primitives like handoffs (so agents can pass context or tasks to each other) and you can orchestrate flows, but it doesn’t enforce a particular structure like CrewAI’s crews. In other words, Agent SDK gives you the Lego pieces (agents, tools, results, etc.), and you can assemble a multi-agent system, but you’ll write more custom logic to coordinate agents. CrewAI gives you a pre-built “crew” structure to slot agents into, which can be quicker if that structure fits your needs. If you only need a single agent or very simple chaining, OpenAI’s SDK might be sufficient. But if you want a buzzy hive of agents working together with minimal fuss, CrewAI shines.

3. Flexibility and Customization:

This is a double-edged sword. CrewAI’s opinionated design (roles, tasks, flows) makes it cohesive but can sometimes feel less flexible. A Reddit user commented, “CrewAI I liked but [it] lacks flexibility”, especially if you try to do something outside its intended patterns. For instance, if you want a very unconventional agent interaction pattern, you might fight against CrewAI’s abstractions. OpenAI’s Agent SDK, being lower-level, is very flexible – you can craft any workflow, since it’s basically a toolkit for agents and you orchestrate via code. The Agent SDK is described as “flexible with customizable agents, tools, and workflows” without imposing a specific architecture. That said, with flexibility comes responsibility: you have to manage the complexity (state, sequence, etc.) yourself more when using Agent SDK.

In terms of integration, both frameworks allow using custom tools and connecting to various models. CrewAI is Python-only (for now) and integrates well with the Python ecosystem (and even with LangChain tools if needed). OpenAI’s Agent SDK is also Python-focused (with some aspects like Model Context Protocol (MCP) aimed at broader use), and it’s designed to be model-agnostic too (via things like LiteLLM support to plug in any model). Neither framework locks you into a single AI model, though OpenAI’s SDK, unsurprisingly, feels tailored for OpenAI’s models and platform.

4. Production Readiness and Tooling:

OpenAI’s Agent SDK is marketed as production-ready from the start. It includes built-in tracing and debugging tools, which allow you to visualize agent reasoning steps and monitor performance. It also has guardrails for safety (you can validate inputs/outputs to keep agents from going rogue). These features indicate that OpenAI expects you to deploy their SDK in real-world applications and feel confident about reliability. Community members highlight the strong tracing/debugging as a big plus, essentially “baked in via a one-line command” to enable it. If you need to closely track agent decisions or meet compliance logging requirements, the Agent SDK has an edge.
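The guardrail idea (validating an agent's output before it reaches a user) is framework-independent and can be sketched in a few lines. This is not the Agent SDK's guardrail API; `check_output`, the length limit, and the secret pattern are all assumptions chosen for illustration.

```python
import re
from typing import Tuple

MAX_CHARS = 1200  # arbitrary limit chosen for this sketch
SECRET_PATTERN = re.compile(r"sk-[A-Za-z0-9]{8,}")  # rough shape of an API key


def check_output(text: str) -> Tuple[bool, str]:
    """Return (ok, reason) for a drafted reply before it is sent to a user."""
    if not text.strip():
        return False, "empty reply"
    if len(text) > MAX_CHARS:
        return False, "reply too long"
    if SECRET_PATTERN.search(text):
        return False, "possible secret in reply"
    return True, "ok"


print(check_output("Use the 'Forgot password' link on the login page."))
```

A check like this can sit between either framework's final output and your chat interface, with failed checks routed to a retry or a human reviewer.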

CrewAI, while used in production by some, is still maturing. It’s described as suitable for production for “crew-based workflows” but being relatively new, it might not have all the bells and whistles yet. For example, CrewAI’s approach to state management is basic – it passes outputs from one task to the next, and you can maintain a shared state, but it doesn’t (as of now) have an elaborate logging or debugging UI out-of-the-box. Some developers mention that CrewAI can throw a lot of warnings in console (a minor gripe), and others caution that it’s not the top pick if “going for production” stability is your #1 concern. That said, CrewAI does emphasize reliability and consistent results in design, and it has a thriving community to support you if issues arise. It’s also entirely open source (MIT licensed), which means you can inspect or modify the internals if needed – a plus for some engineering teams.

5. Ideal Use Cases – When to choose which?

Ultimately, choosing between CrewAI and OpenAI’s Agent SDK comes down to what you need:

- CrewAI is ideal if you want the most intuitive multi-agent system with minimal effort in defining how agents cooperate. If your problem naturally breaks down into roles/goals (like a support team, or a business process with distinct steps handled by different experts), CrewAI will feel like a comfy pair of slippers. You describe who the agents are and what they need to do, and CrewAI helps orchestrate the rest. It’s great for prototypes where you want quick results and for scenarios that resemble human-like teamwork. Just be aware that you may need to adapt to its structure (i.e., it might be less straightforward to implement highly custom flows not anticipated by the framework). Community feedback suggests CrewAI is a top choice for those who “prefer thinking in terms of roles, goals, and tasks”, and for modeling human team structures in AI. If you’re not immediately targeting mission-critical production deployment, or you’re willing to iterate with the community as the framework evolves, CrewAI offers a very developer-friendly experience.

- OpenAI Agent SDK is a strong choice if you prioritize simplicity, stability, and integration with OpenAI’s ecosystem. It’s basically an extension of the OpenAI API philosophy – keep it simple and let developers build on it. If your use case is more about tool use and function calling (e.g., you want an agent that can use a calculator or search engine) with maybe one or two agents, the Agent SDK will serve you well. It’s also currently the go-to if you need robust debugging/tracing or are planning to use OpenAI’s model evaluation and fine-tuning workflows alongside your agent (since it “plays nice” with those out of the box). And of course, if you are deploying in an enterprise setting that demands proven reliability, you might lean towards the OpenAI SDK given it’s directly backed by OpenAI (and likely to be updated with first-party support). One community member’s “key takeaways”: choose OpenAI Agent SDK if you want a “simple, production-ready framework with minimal learning curve… already using OpenAI models and want tight integration”.

It’s worth noting that both frameworks can potentially solve the same problems – and they’re not the only ones out there (LangChain, LangGraph, AutoGen, etc., are other names you’ll encounter). In fact, some developers mix and match (using tools from one in another, for example). There’s no one-size-fits-all, but the community consensus as of early 2025 seems to be: CrewAI for multi-agent collaboration ease, OpenAI SDK for lean production deployments. Or in a witty Reddit summary: CrewAI is like a well-organized team ready to go, while Agent SDK is a box of Lego that lets you build whatever agent system you need – choose based on whether you want guided structure or ultimate flexibility. 😉

Conclusion

Both CrewAI and OpenAI’s Agent SDK open exciting avenues for building AI-driven support bots and automation agents. In this guide, we took a deep dive into CrewAI – from setting it up, understanding its crew & flow paradigm, to building a sample support bot and envisioning business process automations. CrewAI’s friendly abstractions make it feel like you’re managing a team of AI colleagues, which can be both powerful and fun (who wouldn’t want to boss around a few robots?). We also compared CrewAI with the Agent SDK to help you decide which might suit your project best, drawing on community experiences and feedback for an honest perspective.

As an AI VP or developer, you’re at the frontier of blending human-like collaboration with machine efficiency. Whether you pick CrewAI, OpenAI’s SDK, or even a combination, the key is to experiment and iterate. Start with small prototypes – maybe a mini support bot that answers a few FAQs – and gradually increase the complexity of your agent crew. Leverage the community forums (CrewAI’s community and the r/AI_Agents subreddit are great resources) to learn from others’ successes and pitfalls. And don’t be afraid to inject some personality into your agents; a little humor and empathy in your bot’s responses (courtesy of a well-crafted agent persona) can go a long way in making automation feel welcoming to users.

Happy building!

Cohorte Team

May 7, 2025