Designing Graph-Native AI Workflows with Microsoft Agent Framework

A practical, code-level guide to building, debugging, and shipping graph-based agents and workflows the way Microsoft actually intended.

We’ve all done it.

You start with a simple AI “agent”, then product asks for tools, long-running steps, retries, approvals, parallel calls to other services… and suddenly you’re staring at a 500-line state machine made of if, else and TODO comments.

Microsoft Agent Framework exists to kill that monster.

It gives us:

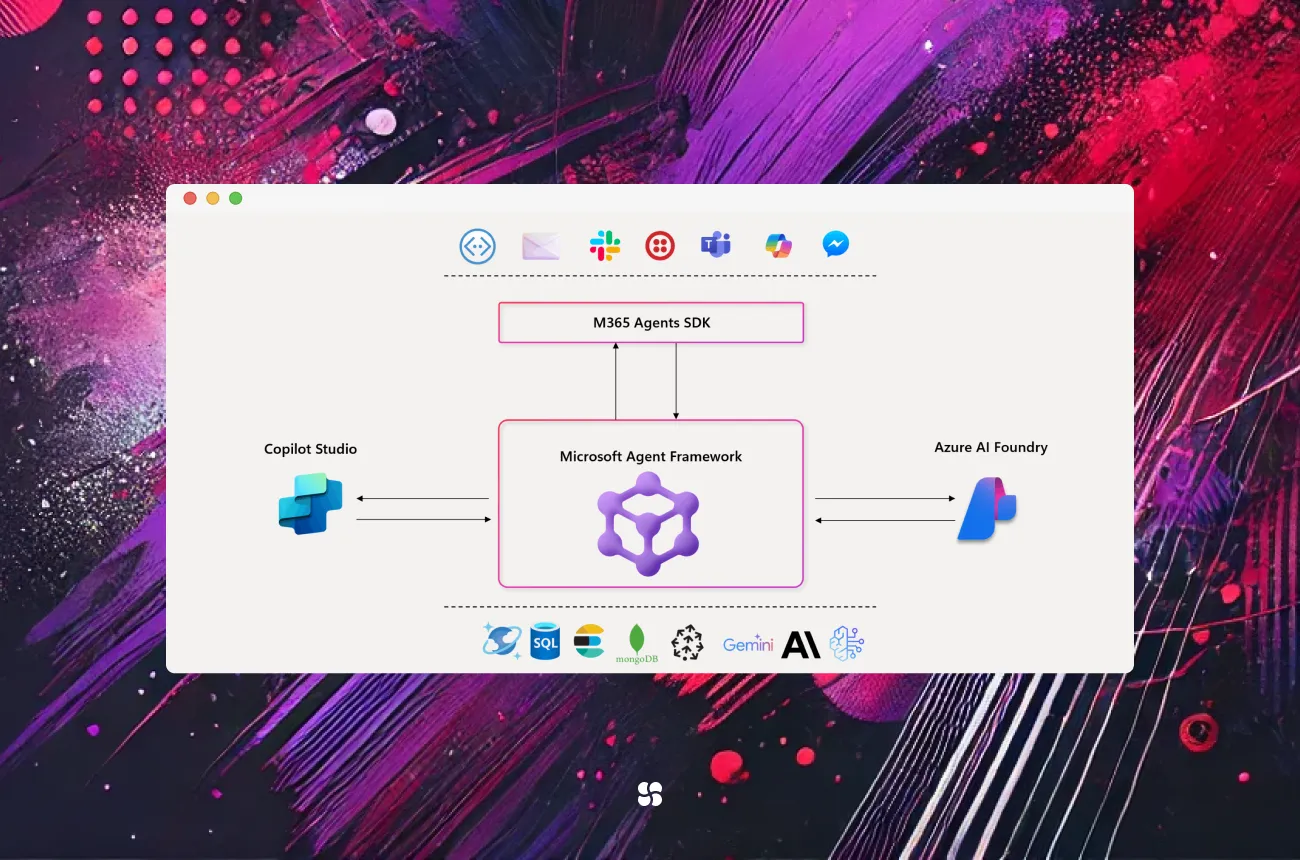

- A graph-native runtime (Workflows) for orchestrating agents and tools

- A clean Agent abstraction on top (including “Workflows as Agents”)

- First-class support for Azure OpenAI, OpenAI, and Azure AI Foundry via the OpenAI-compatible Responses/Chat APIs

In this guide, we’ll walk through how to use it the way the official SDK is shaped today — no invented APIs, no outdated namespaces — and we’ll highlight the subtle bits that can burn you: threading, streaming, secrets, and how workflows really behave at scale.

Table of contents

- Why Agent Framework (and why graphs)?

- Mental model: Agents, Workflows, and “Workflows as Agents”

- Getting started with a Python agent (Responses API)

- Modeling a graph-based workflow

- Turning workflows into agents (and streaming results)

- Concurrency, scaling, and “don’t do this in production”

- Observability: events, logs, and debugging stuck flows

- Comparing Agent Framework with LangGraph, LangChain, and Semantic Kernel

- Implementation checklist & gotchas

- Where we’d take this next

We’ll write in “we” form — think of this as the combined notes of a team that already made the mistakes so you don’t have to.

1. Why Agent Framework, and why graphs?

If we strip the hype away, an “AI agent” is just:

- An LLM (or set of LLM calls)

- A bunch of tools / APIs

- A control flow for when and how to use them

- Some state and memory around that

Most frameworks nail the first two. The graveyard is the last two: control flow and state.

Microsoft Agent Framework’s bet is:

Make the control flow explicit and graph-shaped, not hidden in prompts or tangled if/else logic.

That’s what Workflows bring: a graph of orchestrations (sequential, parallel, routers, loops) and tasks, with events and checkpoints baked in. You then wrap that graph as an Agent so you can talk to it the same way you talk to a “plain” LLM agent.

If you’ve used LangGraph, the concept will feel familiar: Agent Framework is Microsoft’s official, supported stack for this style of development.
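To make "explicit and graph-shaped" concrete, here is a minimal sketch in plain Python. It uses only the standard library's graphlib module, not Agent Framework itself, and every name in it (the step functions, STEPS, GRAPH, run_graph) is hypothetical. The point is only that the dependencies live in data you can inspect rather than in nested if/else.

```python
from graphlib import TopologicalSorter

# Hypothetical step functions; each receives the results produced so far.
def classify(results):
    return "sev2"

def retrieve(results):
    return ["runbook-db-timeouts"]

def plan(results):
    return f"{results['classify']}: follow {results['retrieve'][0]}"

STEPS = {"classify": classify, "retrieve": retrieve, "plan": plan}

# The control flow as data: each node maps to the nodes it depends on.
GRAPH = {"classify": set(), "retrieve": set(), "plan": {"classify", "retrieve"}}

def run_graph(graph, steps):
    """Run every step once, in an order that respects the dependencies."""
    results = {}
    for name in TopologicalSorter(graph).static_order():
        results[name] = steps[name](results)
    return results

if __name__ == "__main__":
    print(run_graph(GRAPH, STEPS)["plan"])
```

Adding a step means adding one function and one entry in GRAPH; nothing else in the runner changes, which is the property a graph runtime generalizes.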

2. Mental model: Agents, Workflows, and “Workflows as Agents”

At a high level:

- Agent: A thing you send messages to (run/run_stream) and get structured responses + events back. It can call tools, hit models, keep short-term context, etc.

- Workflow: A graph that coordinates multiple tasks/agents over time: fan-out / fan-in, retries, human approvals, long-running operations, etc. Workflows operate on events and state, not just “prompt in / text out”.

- Workflows as Agents: A workflow instance that is wrapped as an Agent so you can just call workflow_agent.run(...) instead of manually pushing events into the workflow engine.

So the pattern looks like:

App → Agent → (maybe) Workflow as Agent → LLMs/Tools/Backends

We’ll wire this up in Python and C#.
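Before touching the SDK, the "Workflows as Agents" idea can be illustrated with a small standard-library sketch. The Agent protocol, EchoAgent, and WorkflowAsAgent below are hypothetical stand-ins and not Agent Framework types; they only show why the calling code does not need to care whether a single model call or a multi-step flow sits behind run().

```python
import asyncio
from typing import Awaitable, Callable, Protocol

class Agent(Protocol):
    """Hypothetical minimal agent interface: message in, text out."""
    async def run(self, message: str) -> str: ...

class EchoAgent:
    """Stand-in for a plain LLM-backed agent."""
    async def run(self, message: str) -> str:
        return f"echo: {message}"

Step = Callable[[str], Awaitable[str]]

class WorkflowAsAgent:
    """Wraps an ordered list of async steps behind the same run() method."""
    def __init__(self, steps: list[Step]) -> None:
        self._steps = steps

    async def run(self, message: str) -> str:
        value = message
        for step in self._steps:
            value = await step(value)
        return value

async def upper(text: str) -> str:
    return text.upper()

async def exclaim(text: str) -> str:
    return text + "!"

async def ask(agent: Agent, message: str) -> str:
    # The app only depends on the Agent shape, not on what is behind it.
    return await agent.run(message)

if __name__ == "__main__":
    print(asyncio.run(ask(EchoAgent(), "hi")))
    print(asyncio.run(ask(WorkflowAsAgent([upper, exclaim]), "hi")))
```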

3. Getting started with a Python agent (Responses API)

We’ll start with the Azure OpenAI Responses agent, because that’s the first-class path in the docs today.

3.1 Install the right packages

We keep versions loose (previews move fast):

    pip install "agent-framework-azure-ai" "azure-identity"

We deliberately don’t pin preview build numbers (1.0.0-preview.25xxxx.x) in blog code; they go stale quickly. Stick to “latest” unless you are maintaining a repo that reproduces a specific issue.

3.2 A minimal “assistant” agent in Python

    import os
    import asyncio

    from agent_framework.azure import AzureOpenAIResponsesClient
    from azure.identity import AzureCliCredential


    async def main():
        # Use Azure AD instead of hardcoding keys where possible
        client = AzureOpenAIResponsesClient(
            endpoint=os.environ["AZURE_OPENAI_ENDPOINT"],
            credential=AzureCliCredential(),
        )

        # This creates an Agent backed by the Azure OpenAI Responses API
        agent = await client.create_agent(
            model="gpt-4o-mini",
            name="support-assistant",
            instructions=(
                "You are a concise support assistant. "
                "Ask clarifying questions before giving long answers."
            ),
        )

        # Single-shot, non-streaming call
        result = await agent.run("Hey, I can't log into my account.")
        print("Response:", result.output_text)

        # Streaming variant (Python: run_stream)
        async for update in agent.run_stream("Give me a bullet list of steps."):
            # Updates include partial output, tool calls, events, etc.
            if update.output_text_delta:
                print(update.output_text_delta, end="", flush=True)


    if __name__ == "__main__":
        asyncio.run(main())

Key details that are actually in the current SDK:

- The Python client type is AzureOpenAIResponsesClient in agent_framework.azure.

- You create agents with await client.create_agent(...).

- Non-streaming: await agent.run(...).

- Streaming in Python: agent.run_stream(...) (not runStreaming, not run_streaming()).

If you accidentally mix C# naming into Python (like RunStreamingAsync), your IDE will glare at you and you’ll know why.
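When streaming, you usually want both the live output and the final assembled text (for logging or storage). The sketch below shows one way to collect deltas while printing them. FakeUpdate and fake_stream are hypothetical stand-ins so the example runs without credentials; only the output_text_delta field name is taken from the code above.

```python
import asyncio
from dataclasses import dataclass
from typing import AsyncIterator, Optional

@dataclass
class FakeUpdate:
    """Stand-in for a streaming update; not an SDK type."""
    output_text_delta: Optional[str] = None

async def fake_stream() -> AsyncIterator[FakeUpdate]:
    for piece in ["1. Reset ", None, "your password."]:
        await asyncio.sleep(0)  # yield control, as a network stream would
        yield FakeUpdate(output_text_delta=piece)

async def collect_text(stream: AsyncIterator[FakeUpdate]) -> str:
    """Print deltas as they arrive and return the assembled text."""
    parts: list[str] = []
    async for update in stream:
        if update.output_text_delta:  # skip updates that carry no text
            print(update.output_text_delta, end="", flush=True)
            parts.append(update.output_text_delta)
    print()
    return "".join(parts)

if __name__ == "__main__":
    asyncio.run(collect_text(fake_stream()))
```

With a real agent you would pass the stream returned by the agent in place of fake_stream(), assuming its updates expose the same field.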

4. Modeling a graph-based workflow

Now let’s stop pretending a single LLM call is enough.

Say we’re building an internal “incident co-pilot” for our platform team:

- Ingest a rough incident description.

- Classify severity + impacted surface area.

- Look up runbooks / dashboards.

- Draft a mitigation plan.

- (Optionally) page on-call or schedule a follow-up task.

That’s a graph:

- One branch for analysis

- One for retrieval

- One combining both into a plan

- Maybe a router deciding if we escalate

The Workflows API lets us model this explicitly. Exact syntax depends on the version you’re on, but conceptually:

    from agent_framework.workflows import Workflow, Sequential, Parallel, Task

    # Pseudo-code, but shaped like the real Workflow APIs:
    analyze_task = Task(
        name="analyze-incident",
        handler="handlers.analyze_incident",  # Your Python function
    )

    retrieve_task = Task(
        name="retrieve-runbooks",
        handler="handlers.retrieve_runbooks",
    )

    plan_task = Task(
        name="draft-plan",
        handler="handlers.draft_plan",
    )

    workflow = Workflow(
        name="incident-workflow",
        orchestration=Sequential(
            steps=[
                Parallel(steps=[analyze_task, retrieve_task]),
                plan_task,
            ]
        ),
    )

We’re not inventing magical “graph builders” here — we’re just reflecting the real building blocks the docs talk about: orchestrations (Sequential/Parallel/Router/Loop) and tasks, wired into a workflow.

In practice, you’ll usually define workflows either in code (as above) or via a declarative JSON/YAML form that the Microsoft.Agents.AI.Workflows.Declarative package understands on the .NET side.
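To see what the Sequential-of-Parallel shape means at runtime, here is a framework-free sketch of the same fan-out / fan-in using asyncio.gather. The handler bodies are hypothetical placeholders (a real analyze step would call a model, a real retrieve step would query a search index); the sketch only illustrates the ordering guarantee: both branches run concurrently, and the plan step starts after both finish.

```python
import asyncio

async def analyze_incident(description: str) -> dict:
    # Hypothetical classifier; a real handler would call a model here.
    severity = "high" if "timing out" in description else "low"
    return {"severity": severity}

async def retrieve_runbooks(description: str) -> list[str]:
    # Hypothetical lookup; a real handler would query a search index.
    return ["runbooks/timeouts.md"]

async def draft_plan(analysis: dict, runbooks: list[str]) -> str:
    return f"[{analysis['severity']}] start with {runbooks[0]}"

async def run_incident(description: str) -> str:
    # Fan-out: both branches run concurrently on the same input.
    analysis, runbooks = await asyncio.gather(
        analyze_incident(description),
        retrieve_runbooks(description),
    )
    # Fan-in: the plan step sees both results.
    return await draft_plan(analysis, runbooks)

if __name__ == "__main__":
    print(asyncio.run(run_incident("Service X is timing out")))
```

What a workflow runtime adds on top of this hand-rolled version is the part that is tedious to write yourself: events, checkpoints, and resumability for each step.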

5. Turning workflows into agents (and streaming results)

This is the fun bit: “Workflows as Agents.”

We don’t want our app to know about orchestrations and checkpoints. We want to say:

“Here’s a long incident prompt, please handle the flow and tell us what you did.”

5.1 Python: workflow.as_agent(...).run_streaming(...)

The official pattern (and the one we’ll stick with) looks like this in Python:

    from agent_framework.workflows import Workflow

    # assume 'workflow' defined like above
    workflow_agent = workflow.as_agent(name="incident-co-pilot")


    async def run_incident_flow(description: str):
        # In workflows context, you’ll often see run_streaming for richer updates
        async for update in workflow_agent.run_streaming(
            {"incident_description": description}
        ):
            # You can inspect update events, step outputs, etc.
            if update.output_text_delta:
                print(update.output_text_delta, end="", flush=True)

Note the casing:

- Python: workflow.as_agent(...), workflow_agent.run_streaming(...).

- C#: workflow.AsAgent(...), workflowAgent.RunStreamingAsync(...).

Mixing those is a classic “stare at compiler error for 15 minutes” bug.

5.2 .NET: Workflow.AsAgent().RunStreamingAsync()

On the .NET side, with the current NuGet packages:

    using System;
    using System.Threading.Tasks;
    using Azure.Identity;
    using Microsoft.Agents.AI.AzureOpenAI;
    using Microsoft.Agents.AI.Workflows; // workflows-related namespaces

    public class Program
    {
        public static async Task Main()
        {
            var endpoint = new Uri(Environment.GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT")!);
            var credential = new AzureCliCredential();
            var client = new AzureOpenAIResponsesClient(endpoint, credential);

            // Load or construct your workflow here (declarative or code)
            Workflow incidentWorkflow = BuildIncidentWorkflow(); // your method

            var agent = incidentWorkflow.AsAgent(name: "incident-co-pilot");

            await foreach (var update in agent.RunStreamingAsync(new
            {
                incident_description = "Service X is timing out in region West Europe"
            }))
            {
                if (!string.IsNullOrEmpty(update.OutputTextDelta))
                {
                    Console.Write(update.OutputTextDelta);
                }
            }
        }
    }

Note the package/namespace:

Microsoft.Agents.AI.AzureOpenAI (with the s in Agents) is current; older Microsoft.Agent.* names are pre-framework and should be avoided in fresh code.

6. Concurrency, scaling, and “don’t do this in production”

This is where a lot of teams quietly trip.

There’s an important GitHub thread where the Agent Framework maintainers clarify the threading guarantees around workflows and executors. Summary:

- A Workflow instance is not thread-safe. You must not run multiple executions concurrently on the same instance.

- A Workflow executor instance is not thread-safe either. Don’t share one executor across many workflows in parallel.

- However, multiple executors inside a workflow can run concurrently (e.g., in parallel orchestrations), so any shared/global state they touch must be synchronized and/or externalized.

So in code terms, please don’t do this:

    // ❌ anti-pattern: global workflow/agent as singleton across requests
    public static Workflow GlobalWorkflow = BuildWorkflow();

    // Called by many concurrent requests:
    public async Task HandleRequestAsync(string input)
    {
        var agent = GlobalWorkflow.AsAgent(name: "shared");

        await foreach (var update in agent.RunStreamingAsync(input))
        {
            // ...
        }
    }

Instead:

- Treat workflows/agents as scoped per execution (or per logical “session”), or

- Use a host / orchestrator that manages instances and lifecycles for you.

A safer pattern:

    public async Task HandleRequestAsync(string input)
    {
        var workflow = BuildWorkflow(); // new instance per request
        var agent = workflow.AsAgent(name: "per-request");

        await foreach (var update in agent.RunStreamingAsync(input))
        {
            // ...
        }
    }

You can absolutely scale out by running many workflow instances concurrently; just keep the instance itself off the global shelf.
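The same per-execution rule can be demonstrated in Python with a toy model. ToyWorkflow below is hypothetical and deliberately raises when entered twice, purely to make the hazard visible; this sketch makes no claim about whether a real workflow instance fails loudly or misbehaves quietly under concurrent use.

```python
import asyncio

class ToyWorkflow:
    """Hypothetical workflow that keeps per-run state on the instance."""
    def __init__(self) -> None:
        self._running = False
        self.seen: list[str] = []

    async def run(self, item: str) -> list[str]:
        if self._running:
            raise RuntimeError("instance is already executing")
        self._running = True
        try:
            await asyncio.sleep(0)  # simulate awaiting a model or tool call
            self.seen.append(item)
            return list(self.seen)
        finally:
            self._running = False

def build_workflow() -> ToyWorkflow:
    return ToyWorkflow()

async def handle_request(item: str) -> list[str]:
    # New instance per request: no state shared between executions.
    return await build_workflow().run(item)

async def shared_instance_demo() -> list:
    shared = build_workflow()
    # Two concurrent executions on ONE instance: the second is rejected.
    return await asyncio.gather(
        shared.run("a"), shared.run("b"), return_exceptions=True
    )

async def per_request_demo() -> list:
    # Two concurrent executions, each on its own instance: both succeed.
    return await asyncio.gather(handle_request("a"), handle_request("b"))

if __name__ == "__main__":
    print(asyncio.run(shared_instance_demo()))
    print(asyncio.run(per_request_demo()))
```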

7. Observability: events, logs, and debugging stuck flows

Agent Framework leans heavily on events for observability:

- Workflow started / completed / failed

- Task started / completed

- Checkpoints written / resumed

- Tool calls, etc.

The recommended pattern is:

- Use the events API and your existing logging/metrics stack (Serilog, Application Insights, OpenTelemetry, etc.).

- Optionally, build thin adapters that map workflow events → traces/spans.

Example (pseudo-Python):

    async for update in workflow_agent.run_streaming(payload):
        for evt in update.events:
            logger.info(
                "WorkflowEvent",
                extra={
                    "workflow_name": evt.workflow_name,
                    "step": evt.step_name,
                    "status": evt.status,
                    "timestamp": evt.timestamp,
                },
            )

        if update.output_text_delta:
            print(update.output_text_delta, end="", flush=True)

What the framework doesn’t do out of the box (as of now):

- Automatically wire itself to Datadog/New Relic/etc.

- Provide a magic “one click observability vendor integration”.

You’re expected to plug it into your existing telemetry story, using the events as the source of truth.
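A "thin adapter" can be as small as one function. The sketch below uses only the standard logging module; WorkflowEvent is a hypothetical dataclass mirroring the fields logged in the example above, and ListHandler is an in-memory stand-in for whatever handler or exporter your telemetry stack provides. The one design choice it adds is mapping failed steps to ERROR so alerting can key off the log level.

```python
import logging
from dataclasses import dataclass

@dataclass
class WorkflowEvent:
    """Hypothetical event shape; not an SDK type."""
    workflow_name: str
    step_name: str
    status: str
    timestamp: float

class ListHandler(logging.Handler):
    """Collects records in memory; swap for your real handler/exporter."""
    def __init__(self) -> None:
        super().__init__()
        self.records: list[logging.LogRecord] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.records.append(record)

def log_event(logger: logging.Logger, evt: WorkflowEvent) -> None:
    # Failures log at ERROR so alerting rules can key off the level.
    level = logging.ERROR if evt.status == "failed" else logging.INFO
    logger.log(
        level,
        "WorkflowEvent",
        extra={
            "workflow_name": evt.workflow_name,
            "step": evt.step_name,
            "status": evt.status,
            "timestamp": evt.timestamp,
        },
    )

logger = logging.getLogger("workflow.events")
logger.setLevel(logging.INFO)
handler = ListHandler()
logger.addHandler(handler)

log_event(logger, WorkflowEvent("incident-workflow", "draft-plan", "failed", 0.0))
log_event(logger, WorkflowEvent("incident-workflow", "draft-plan", "completed", 1.0))
```

The keys passed through extra become attributes on the log record, so structured formatters and exporters can pick them up without parsing the message string.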

8. Comparing Agent Framework with LangGraph, LangChain, and Semantic Kernel

We’ve had a few internal “religious debates” on this, so let’s make it practical.

8.1 Versus LangGraph (and other agent-graph libraries)

Similarities

- Graph-based orchestration model

- Event-driven, checkpointable flows

- Supports multi-agent workflows, tool calls, streaming

Agent Framework strengths (esp. for Microsoft shops)

- Deep, first-party support for Azure OpenAI, OpenAI, and Azure AI Foundry using the OpenAI-compatible Responses API.

- Official, supported packages in .NET and Python, with Learn docs and samples.

- A strong story for enterprise auth (managed identities, Azure AD) and integration with broader Azure ecosystem.

If you’re already “all-in” on Azure and .NET, Agent Framework is the path of least resistance.

8.2 Versus LangChain

LangChain is fantastic for:

- Rapid prototyping

- Tool abstractions

- Many RAG patterns and vector DB integrations

But its control flow can still drift into code-spaghetti if you’re not disciplined.

Agent Framework’s Workflows are much more opinionated about:

- Graph structure

- Events and checkpoints

- Long-running, resumable processes

A common pattern we’ve seen:

Use LangChain (or similar) for data prep, document loaders, some RAG plumbing — then orchestrate the system using Agent Framework workflows & agents.

8.3 Versus Semantic Kernel

Microsoft has been clear in talks and labs that Agent Framework is the recommended stack for new agentic apps, especially those using the Responses API and Azure AI Agent services.

That does not mean Semantic Kernel is dead.

We like this positioning:

- Use Agent Framework for:

  - High-level agent interfaces

  - Workflows / orchestrations / multi-step processes

  - Integration with Azure OpenAI/Foundry Responses agents

- Use Semantic Kernel where it’s still strong:

  - Plugins (wrapping APIs, tools)

  - Some memory and data-plane helpers

  - Legacy apps already invested in SK

9. Implementation checklist & gotchas

Here’s the “sticky note on the monitor” version for your team.

9.1 API naming cheatsheet

Python

- Client:

      from agent_framework.azure import AzureOpenAIResponsesClient

- Create agent:

      agent = await client.create_agent(...)

- Run:

      await agent.run(...)

- Streaming:

      async for update in agent.run_stream("..."): ...

- Workflows as agents:

      workflow_agent = workflow.as_agent(name="...")
      async for update in workflow_agent.run_streaming(payload): ...

.NET

- Packages:

  - Microsoft.Agents.AI.AzureOpenAI

  - Microsoft.Agents.AI.OpenAI

  - Microsoft.Agents.AI.Workflows.*

- Create agent:

      var agent = await client.CreateAgentAsync(...);

- Run:

      await agent.RunAsync(input);

- Streaming:

      await foreach (var update in agent.RunStreamingAsync(input)) { ... }

- Workflows as agents:

      var workflowAgent = workflow.AsAgent(name: "...");

If you see Microsoft.Agent.* (singular) or bespoke method names in docs/blogs, double-check the publish date — a lot changed as the framework moved into public preview.

9.2 Security & secrets

- Prefer Azure AD creds (managed identity, AzureCliCredential) over hard-coded API keys.

- If you must use keys, keep them in environment variables or secure config:

      client = AzureOpenAIResponsesClient(
          endpoint=os.environ["AZURE_OPENAI_ENDPOINT"],
          api_key=os.environ["AZURE_OPENAI_API_KEY"],
      )

- Be explicit about tool safety:

  - Use allowlists for outbound HTTP tools.

  - Never let “run shell” be a generic tool in a multi-tenant environment.

  - The framework is not a sandbox.
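An outbound allowlist does not need framework support; it can be a plain check that every HTTP tool calls before making a request. The sketch below is one possible shape using urllib.parse from the standard library. The host names and function names are hypothetical, and in practice the allowlist would come from configuration rather than a module constant.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; in practice load this from per-tenant config.
ALLOWED_HOSTS = {"status.example.com", "api.example.com"}

def is_allowed_url(url: str, allowed_hosts: set[str] = ALLOWED_HOSTS) -> bool:
    """Allow only https URLs whose exact hostname is on the allowlist."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    # hostname is lowercased and excludes any userinfo and port.
    return parts.hostname in allowed_hosts

def checked_fetch_target(url: str) -> str:
    """Gate to call before the tool performs any outbound request."""
    if not is_allowed_url(url):
        raise PermissionError(f"outbound host not allowed: {url}")
    return url
```

Matching on the parsed hostname rather than on a string prefix is what rejects look-alike URLs such as https://api.example.com.evil.test/ or https://api.example.com@evil.test/. This check does not cover redirects, so the HTTP client should either disable them or re-check each hop.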

9.3 Threading & scaling

- Do not share workflow or executor instances across concurrent executions.

- It’s fine to run many workflows in parallel; just treat each as an isolated unit.

- For long-running flows, lean on checkpoints and external data stores instead of in-memory globals.
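To illustrate the last point, here is a minimal sketch of checkpoint-and-resume with state kept outside the process. It uses a local JSON file as a stand-in for a real external store (database, blob storage), and the step names and helper functions are hypothetical; it does not use the framework's own checkpoint API.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional

STEPS = ["analyze", "retrieve", "plan"]  # hypothetical step names

def load_checkpoint(path: Path) -> dict:
    if path.exists():
        return json.loads(path.read_text())
    return {"done": [], "outputs": {}}

def save_checkpoint(path: Path, state: dict) -> None:
    # Write to a temp file, then rename, so a crash never leaves half a file.
    tmp = path.with_suffix(".tmp")
    tmp.write_text(json.dumps(state))
    tmp.replace(path)

def run_steps(path: Path, fail_at: Optional[str] = None) -> dict:
    """Run the remaining steps, persisting state after each one."""
    state = load_checkpoint(path)
    for step in STEPS:
        if step in state["done"]:
            continue  # finished in an earlier run
        if step == fail_at:
            raise RuntimeError(f"simulated crash in {step}")
        state["outputs"][step] = f"{step}-ok"
        state["done"].append(step)
        save_checkpoint(path, state)
    return state

def demo() -> dict:
    path = Path(tempfile.mkdtemp()) / "incident-123.json"
    try:
        run_steps(path, fail_at="plan")  # first attempt dies before "plan"
    except RuntimeError:
        pass
    return run_steps(path)  # second attempt resumes from the checkpoint

if __name__ == "__main__":
    print(demo()["done"])
```

Because nothing lives in process memory between attempts, the second run can happen on a different worker or after a restart, which is the property in-memory globals cannot give you.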

9.4 Versioning

- In posts and internal docs:

  - Say “latest preview of Microsoft.Agents.* / agent-framework-* packages”.

  - Link to:

    - NuGet profile: MicrosoftAgentFramework

    - PyPI package page for agent-framework

- Only pin exact versions in your own repo (e.g., requirements.txt, .csproj), not in prose.

10. Where we’d take this next

If we were in your shoes building production agents on top of Agent Framework, our roadmap would look like this:

- V0 – Single Agent with Responses

  - One agent per use case

  - No workflows yet

  - Evals over typical user journeys

- V1 – Workflows for multi-step journeys

  - Express key processes (onboarding, incident handling, approvals) as Workflows

  - Turn them into agents and expose them via your API/backend

  - Add basic observability via events → logs/traces

- V2 – Multi-tenant & resilient

  - Per-tenant configuration + policies

  - Proper checkpointing / resuming for long-running flows

  - Strong evals around cost/latency/error modes

- V3 – Hybrid ecosystem

  - Combine LangChain/LangGraph/SK pieces where they shine

  - Use Agent Framework as the “orchestration backbone”

  - Iterate agents using eval-driven development: tests vs. regressions, not vibes

— Cohorte Team

November 24, 2025.