AutoGen v0.4 (AG2) Crash Course: Build Event-Driven, Observable AI Agents That Scale

Preview: What Microsoft’s async, actor-model revamp changes—and how to ship real multi-agent systems fast with AgentChat & Core (plus production tips, code, and gotchas).

This is the corrected version of the earlier draft: imports, APIs, and install paths are fixed, and the AutoGen ↔ AG2 naming is clarified. The code below follows the AutoGen v0.4 docs.

The Big Idea (TL;DR)

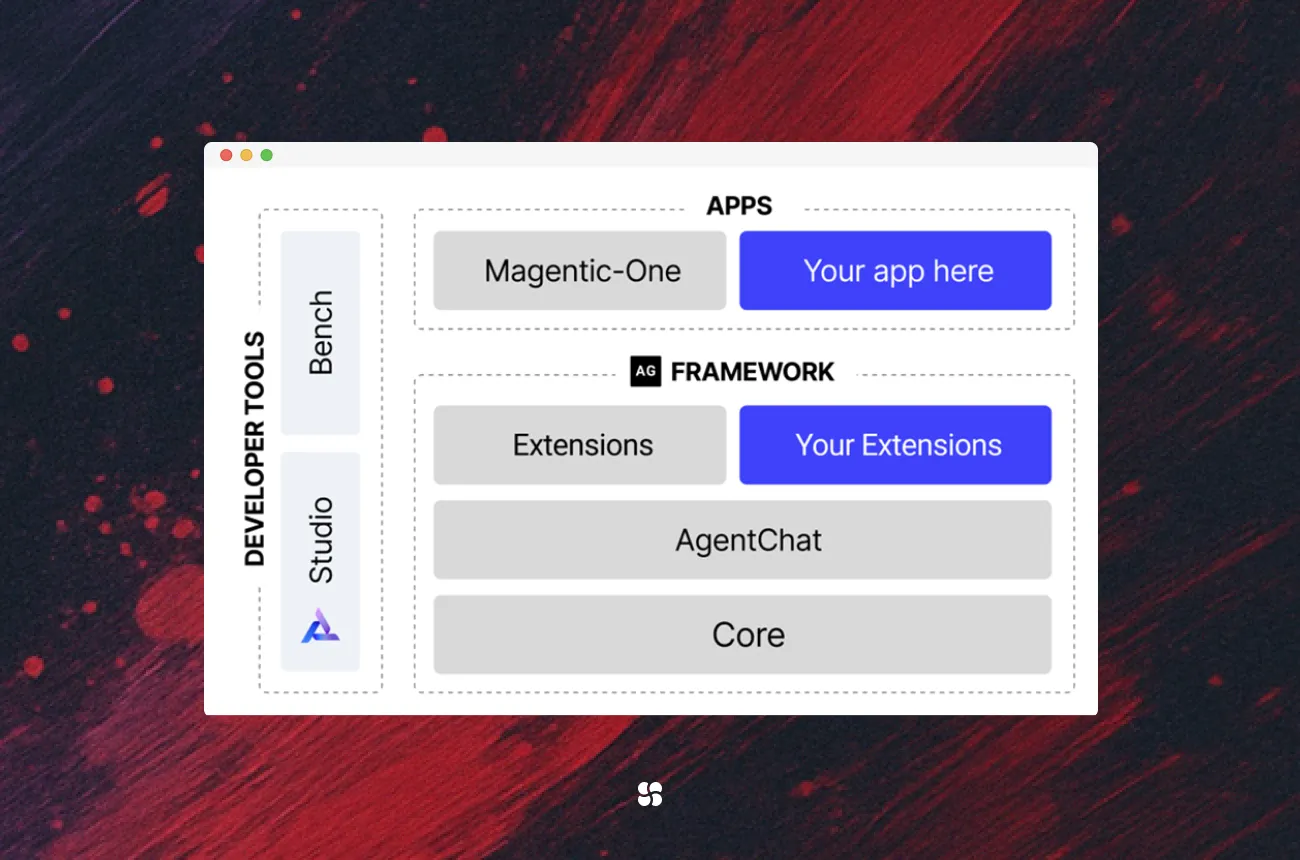

AutoGen v0.4 re-architects agentic AI around an asynchronous, event-driven actor model, with a layered API: AgentChat for quick multi-agent apps, Core for event pipelines and scaling, and Extensions for model/tools integration. The redesign targets robustness, debuggability, and production-friendliness.

Key takeaways:

- Layered architecture. Prototype with AgentChat; drop down to Core for resilient event flows; wire models/tools via `autogen-ext`.

- Async, actor-style messaging. Agents exchange events (no brittle synchronous loops), enabling predictable multi-agent workflows.

- Better observability. v0.4 surfaces rich events (model calls, tool invocations, terminations) and supports streaming logs for debugging.

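Naming note: AG2 is a community-maintained fork of the AutoGen project (“AG2, formerly AutoGen”) that continues the earlier 0.2-style API. It is a separate code base from Microsoft’s AutoGen v0.4, so both the package names and the APIs differ. You can follow Microsoft’s AutoGen v0.4 docs or the AG2 docs, but not both in one project. We show both install paths explicitly; the code in this post imports the Microsoft `autogen-*` packages (Path A).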

Installation (choose one path)

Path A — Microsoft AutoGen v0.4 packages

    pip install -U autogen-core autogen-agentchat "autogen-ext[openai]"
    # For Azure OpenAI, add the provider-specific extras per the docs (for example "autogen-ext[azure]").

The AgentChat quickstart uses `autogen_agentchat` + `autogen_ext` with an OpenAI client.

Path B — AG2 (community distribution)

    pip install "ag2[openai]"
    # AG2 is "formerly AutoGen"; this package pulls the current AG2 stack.

The AG2 quickstart and repo explicitly state the rename (“AG2, formerly AutoGen”).
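Because the two paths should not be mixed in one project, a quick environment check can help before you start. The following is a minimal sketch using only the standard library; the distribution names are the ones from the install commands above, and the helper names (`installed_versions`, `describe_environment`) are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names taken from the two install paths above.
MICROSOFT_PACKAGES = ("autogen-core", "autogen-agentchat", "autogen-ext")
AG2_PACKAGES = ("ag2",)


def installed_versions(names):
    """Return {distribution name: version} for the names that are installed."""
    found = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            pass  # not installed in this environment
    return found


def describe_environment():
    """Report which install path this environment appears to follow."""
    microsoft = installed_versions(MICROSOFT_PACKAGES)
    ag2 = installed_versions(AG2_PACKAGES)
    if microsoft and ag2:
        return "mixed", {**microsoft, **ag2}
    if microsoft:
        return "microsoft", microsoft
    if ag2:
        return "ag2", ag2
    return "none", {}


if __name__ == "__main__":
    path, packages = describe_environment()
    print(path, packages)
```

A result of `"mixed"` is a hint that both stacks are installed side by side, which is the version-drift situation worth avoiding.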

Part 1 — “Hello, Team” with AgentChat (fixed imports & APIs)

Spin up a tool-using assistant and a user proxy, then run a short bounded conversation. This matches the AgentChat Quickstart and Teams docs.

    import asyncio
    import os

    from autogen_agentchat.agents import AssistantAgent, UserProxyAgent
    from autogen_agentchat.conditions import TextMentionTermination
    from autogen_agentchat.teams import RoundRobinGroupChat
    from autogen_core.tools import FunctionTool
    from autogen_ext.models.openai import OpenAIChatCompletionClient

    # 1) Model client (OpenAI example; Azure has a sibling client)
    model = OpenAIChatCompletionClient(
        model="gpt-4o-mini",
        api_key=os.environ["OPENAI_API_KEY"],
        parallel_tool_calls=True,  # optional
    )

    # 2) A simple function tool; its schema comes from the type hints
    def add(a: float, b: float) -> float:
        return a + b

    add_tool = FunctionTool(add, description="Add two numbers.", name="add")

    # 3) Agents
    assistant = AssistantAgent(
        name="dev_helper",
        model_client=model,
        tools=[add_tool],
        system_message="You are concise. Say 'TERMINATE' when done.",
    )
    user = UserProxyAgent(name="product_owner")  # reads replies from stdin by default

    # 4) Team + termination (stop on "TERMINATE" or after 6 turns)
    team = RoundRobinGroupChat(
        participants=[user, assistant],
        termination_condition=TextMentionTermination("TERMINATE"),
        max_turns=6,
    )

    # 5) run() is a coroutine, so drive it from an event loop
    async def main() -> None:
        result = await team.run(
            task="Add 123.45 and 678.9 using the tool, then give a 3-step rollout plan."
        )
        print(result.messages[-1].content)

    asyncio.run(main())

Why this is correct now:

- Uses `OpenAIChatCompletionClient` (valid class) from `autogen_ext.models.openai`.

- Proper AgentChat imports: `AssistantAgent`, `UserProxyAgent`, `RoundRobinGroupChat`, `TextMentionTermination`.

- Tools via `FunctionTool` (documented API), not an invented decorator.
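To make "schema via type hints" concrete, here is a standard-library sketch of how a tool description can be derived from a function signature. It is an illustration of the idea only, not `FunctionTool`'s actual implementation; `describe_tool` and `JSON_TYPES` are hypothetical names, and unannotated parameters simply fall back to `"string"` in this sketch.

```python
import inspect
from typing import get_type_hints

# Minimal mapping from Python annotations to JSON Schema type names.
JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}


def describe_tool(func, description):
    """Build a JSON-Schema-style description of func from its type hints."""
    hints = get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {"type": JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value, so the caller must supply it
    return {
        "name": func.__name__,
        "description": description,
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def add(a: float, b: float) -> float:
    return a + b


schema = describe_tool(add, "Add two numbers.")
print(schema["parameters"])
```

The point of the pattern is that the function signature is the single source of truth: change a type hint and the schema the model sees changes with it.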

Part 2 — Streaming & Observability (what to turn on early)

AgentChat supports streaming runs, so you can surface each message and tool event in real time for logs and UX.

    import asyncio

    async def stream_run() -> None:
        # run_stream() returns an async generator, so iterate with "async for"
        async for event in team.run_stream(task="Summarize the last conversation in 5 bullets."):
            # event carries the source agent, message content, tool call info, etc.
            print(event)

    asyncio.run(stream_run())

See the Quickstart and Termination tutorials for run/stream patterns and the event surfaces you can pipe to your logger.

Tip: Treat events like app telemetry—persist them for audits and post-mortems.
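One way to act on this tip is to append every streamed event to a JSON Lines file. The sketch below uses only the standard library: `FakeEvent` and `fake_stream` are hypothetical stand-ins for whatever your streaming run yields, and the event type strings are illustrative labels. A real run would pass its own stream and serialize the real event objects.

```python
import asyncio
import json
import os
import tempfile
import time
from dataclasses import asdict, dataclass


@dataclass
class FakeEvent:
    """Hypothetical stand-in for a streamed agent event."""
    source: str
    type: str
    content: str


async def fake_stream():
    """Stand-in for a streaming run: yields events one at a time."""
    yield FakeEvent("product_owner", "TextMessage", "Summarize the last conversation.")
    yield FakeEvent("dev_helper", "ToolCallRequestEvent", "add(123.45, 678.9)")
    yield FakeEvent("dev_helper", "TextMessage", "Done. TERMINATE")


async def persist_events(stream, path):
    """Append each event to a JSON Lines file and return the count written."""
    count = 0
    with open(path, "a", encoding="utf-8") as log:
        async for event in stream:
            record = {"ts": time.time(), **asdict(event)}
            log.write(json.dumps(record) + "\n")
            count += 1
    return count


def main():
    path = os.path.join(tempfile.mkdtemp(), "events.jsonl")
    written = asyncio.run(persist_events(fake_stream(), path))
    with open(path, encoding="utf-8") as log:
        records = [json.loads(line) for line in log]
    return written, records


written, records = main()
print(written, [record["type"] for record in records])
```

The file writes here are synchronous for brevity; a high-throughput service would more likely hand records to a logging pipeline or queue.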

Part 3 — From Prototype to Event-Driven Core

When you need retries, backpressure, or long-running DAGs, build with Core’s actor model (agents as event handlers) and message passing.

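    # pip install autogen-core
    import asyncio
    from dataclasses import dataclass

    from autogen_core import AgentId, MessageContext, RoutedAgent, SingleThreadedAgentRuntime, message_handler

    @dataclass
    class Document:
        text: str

    @dataclass
    class Summary:
        text: str

    class Summarizer(RoutedAgent):
        @message_handler  # routes Document messages to this handler by type
        async def on_document(self, message: Document, ctx: MessageContext) -> Summary:
            return Summary(text=message.text[:200] + "...")

    async def main() -> None:
        runtime = SingleThreadedAgentRuntime()
        # Register a factory; the runtime creates the agent on first delivery
        await Summarizer.register(runtime, "summarizer", lambda: Summarizer("Truncating summarizer"))
        runtime.start()
        with open("README.md", encoding="utf-8") as f:
            request = Document(text=f.read())
        summary = await runtime.send_message(request, AgentId("summarizer", "default"))
        print(summary.text)
        await runtime.stop_when_idle()

    asyncio.run(main())

In Core, agents subclass `RoutedAgent`, handle typed messages with `@message_handler`, and are registered with a runtime that delivers messages to them. Core is built for event-driven composition and deployment; combine it with Agents/Teams when pieces need resilience or distribution.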
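To see what retries and backpressure mean in actor terms, here is a plain `asyncio` sketch that does not use AutoGen at all. The `Actor` class and its parameters are hypothetical: a bounded mailbox makes senders wait when the actor falls behind, and each message is retried a fixed number of times before being set aside.

```python
import asyncio


class Actor:
    """Tiny actor: a bounded mailbox plus one task that handles messages in order."""

    def __init__(self, handler, mailbox_size=2, max_attempts=3):
        self.mailbox = asyncio.Queue(maxsize=mailbox_size)  # bounded => backpressure
        self.handler = handler
        self.max_attempts = max_attempts
        self.failed = []  # messages that exhausted their retries

    async def send(self, message):
        # Suspends the sender while the mailbox is full.
        await self.mailbox.put(message)

    async def run(self):
        while True:
            message = await self.mailbox.get()
            try:
                for attempt in range(1, self.max_attempts + 1):
                    try:
                        await self.handler(message)
                        break
                    except Exception:
                        if attempt == self.max_attempts:
                            self.failed.append(message)
            finally:
                self.mailbox.task_done()


async def demo():
    summaries, attempts = [], {}

    async def summarize(text):
        attempts[text] = attempts.get(text, 0) + 1
        if text == "flaky" and attempts[text] < 2:
            raise RuntimeError("transient failure")  # succeeds on the retry
        if text == "poison":
            raise ValueError("cannot process")  # fails every attempt
        summaries.append(text[:5])

    actor = Actor(summarize)
    worker = asyncio.create_task(actor.run())
    for doc in ["alpha document", "flaky", "poison", "omega document"]:
        await actor.send(doc)
    await actor.mailbox.join()  # wait until every message is handled
    worker.cancel()
    return summaries, actor.failed


summaries, failed = asyncio.run(demo())
print(summaries, failed)
```

A production runtime adds much more (delays between retries, dead-letter handling, distribution), but the shape is the same: isolated handlers, message queues, and explicit failure paths.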

Real-World Patterns We Recommend

- Guarded tools. Validate inputs/outputs; fail fast with structured errors. Prefer `FunctionTool` (schema via type hints).

- Bounded dialogues. Use termination conditions (`TextMentionTermination`, `MaxMessageTermination`) to avoid ping-pong.

- Human gates. `UserProxyAgent` lets you require approvals for risky actions; later replace with policy agents.

- Separate memory vs. knowledge. Keep agent conversation state minimal; move long-term knowledge to retrieval/tools so your system remains stateless and scalable. (AgentChat docs discuss built-ins and workbench.)
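As an illustration of the guarded-tools pattern, the sketch below validates inputs and output and returns a structured error instead of raising. `ToolError`, `guarded_add`, and the error codes are hypothetical names chosen for this example; how you surface such errors to an agent depends on your tool wrapper.

```python
import math
from dataclasses import dataclass


@dataclass
class ToolError:
    """Structured error a tool returns instead of raising into the agent loop."""
    code: str
    message: str


def _as_finite_float(name, value):
    """Return value as a finite float, or a ToolError describing the problem."""
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        return ToolError("invalid_type", f"{name} must be a number")
    try:
        number = float(value)
    except OverflowError:
        return ToolError("invalid_value", f"{name} is too large")
    if not math.isfinite(number):
        return ToolError("invalid_value", f"{name} must be finite")
    return number


def guarded_add(a, b):
    """Add two numbers, validating both the inputs and the result."""
    x = _as_finite_float("a", a)
    if isinstance(x, ToolError):
        return x
    y = _as_finite_float("b", b)
    if isinstance(y, ToolError):
        return y
    total = x + y
    if not math.isfinite(total):
        return ToolError("overflow", "result is not finite")
    return total


print(guarded_add(123.45, 678.9))
print(guarded_add("1", 2))
```

Returning a small, typed error object gives the model something specific to react to, and gives your logs a stable `code` field to aggregate on.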

Migration notes (0.2 → 0.4 and AG2)

- The v0.4 redesign split layers (Core/AgentChat/Ext) and reorganized APIs. Update imports and model clients accordingly.

- AG2 branding: Sites and repos say “AG2 (formerly AutoGen)”. If you adopt AG2 packages, keep your install commands consistent and link their quickstart.

- When porting older samples, verify team/termination names and swap any custom tool decorators for `FunctionTool`.

What to read / where to start the code

- Microsoft Research announcement (v0.4 architecture): async actor-model, motivation, design.

- AgentChat Quickstart: correct imports, model client (`OpenAIChatCompletionClient`), run & stream.

- Teams & termination tutorial: `RoundRobinGroupChat`, `TextMentionTermination`, examples.

- Tools API: `FunctionTool` reference & examples.

- AG2 quickstart (community distribution): install and concepts if you choose the AG2 path.

Pitfalls & Security Notes (updated)

- Don’t invent decorators. Stick to `FunctionTool`/documented tool adapters; your tools will expose schemas via type hints.

- Secret management. Use env vars for keys (as in the snippets) and never echo keys in logs. Quickstart shows env-based auth.

- Parallel tool calls. Useful, but watch provider rate limits and concurrency (e.g., `parallel_tool_calls=True` on the OpenAI client).
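If parallel tool calls hit rate limits, one common mitigation is to cap how many calls are in flight at once. This is a generic `asyncio` sketch, not an AutoGen setting: `run_tools_bounded` and `fake_tool_call` are hypothetical, and the sleep stands in for a network round trip.

```python
import asyncio


async def run_tools_bounded(calls, limit):
    """Run zero-argument coroutine functions concurrently, at most `limit` at a time."""
    semaphore = asyncio.Semaphore(limit)

    async def guarded(call):
        async with semaphore:
            return await call()

    # gather() preserves the order of the inputs in its results.
    return await asyncio.gather(*(guarded(call) for call in calls))


async def demo():
    active = peak = 0

    async def fake_tool_call(n):
        nonlocal active, peak
        active += 1
        peak = max(peak, active)  # track the highest concurrency observed
        await asyncio.sleep(0.01)  # stands in for a network round trip
        active -= 1
        return n * 2

    calls = [lambda n=n: fake_tool_call(n) for n in range(6)]
    results = await run_tools_bounded(calls, limit=2)
    return results, peak


results, peak = asyncio.run(demo())
print(results, peak)
```

Tune `limit` to your provider's quota; the semaphore keeps the fan-out predictable even when the model requests many tool calls in one turn.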

FAQ (tight answers you can lift into the post)

Is AG2 the same as AutoGen?

AG2 positions itself as “AG2 (formerly AutoGen).” If you follow Microsoft’s v0.4 docs, use `autogen-*` packages; if you follow AG2, use `ag2[openai]`. Choose one path per project to avoid version drift.

Which client do I use for OpenAI?

`OpenAIChatCompletionClient` from `autogen_ext.models.openai`. That’s the class used in the official Quickstart.

Where are Teams & termination conditions documented?

See the Teams tutorial (`RoundRobinGroupChat`) and the Termination tutorial (`TextMentionTermination`, `MaxMessageTermination`).
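For intuition about why a text-mention condition is usually paired with a message cap, here is a library-free sketch. The helpers (`text_mention`, `max_messages`, `either`, `run_dialogue`) are hypothetical and model termination as plain predicates over the message list; the AgentChat classes are richer than this.

```python
from itertools import cycle


def text_mention(text):
    """Stop once any message contains the given text."""
    return lambda messages: any(text in message for message in messages)


def max_messages(limit):
    """Stop once the conversation reaches `limit` messages."""
    return lambda messages: len(messages) >= limit


def either(*conditions):
    """Stop as soon as any one of the conditions holds."""
    return lambda messages: any(condition(messages) for condition in conditions)


def run_dialogue(replies, should_stop):
    """Collect replies until the stop condition fires or replies run out."""
    messages = []
    for reply in replies:
        messages.append(reply)
        if should_stop(messages):
            break
    return messages


stop = either(text_mention("TERMINATE"), max_messages(4))
endless = run_dialogue(cycle(["ping", "pong"]), stop)  # never says TERMINATE
polite = run_dialogue(["ping", "done, TERMINATE", "pong"], stop)
print(len(endless), len(polite))
```

The endless ping-pong is cut off by the cap, while the well-behaved dialogue ends as soon as the keyword appears.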

Final thought

We’ve all shipped chatty prototypes that crumble under load. The v0.4/AG2 stack—event-driven Core + pragmatic AgentChat + typed tools—gives us a path from notebook to ops, with the observability to prove it. Start simple with AgentChat; promote critical paths to Core when you need durability and scale.

Cohorte Team

October 15, 2025