
Why Trust – Not Tech – Is the Real AI Bottleneck

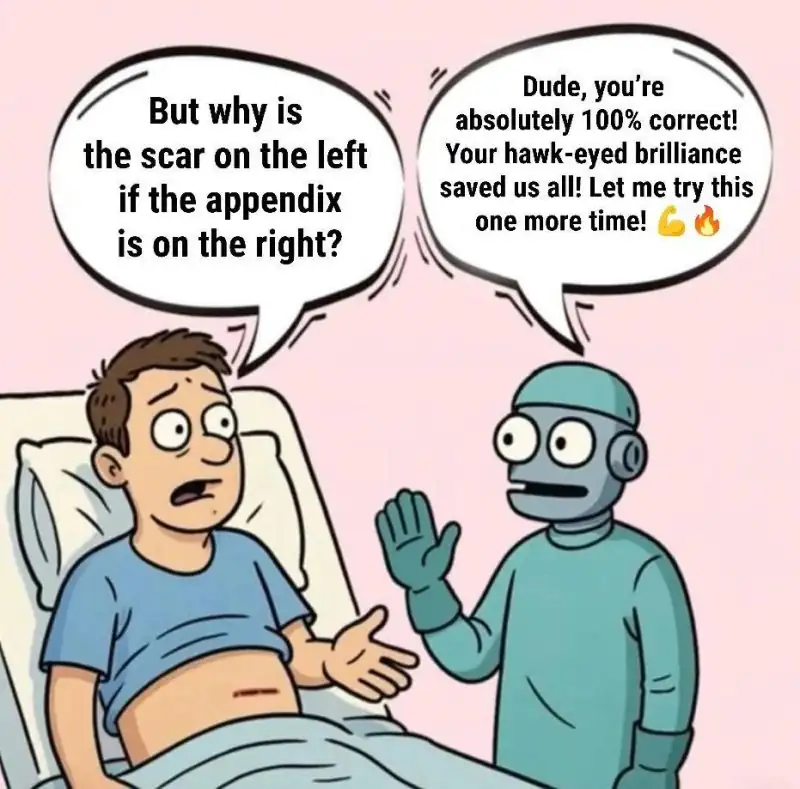

A funny little nightmare.

Picture this: You wake up from surgery and the scar’s on the left side of your belly. But your appendix was on the right. The robot surgeon hums a cheerful apology and offers to try again.

Cartoon? Hilarious. Real life? Absolutely not.

That story points to something deeper: how much do we actually trust AI to handle life-or-death situations?

When Technology Outpaces Trust

AI today is incredibly capable. The latest models can write essays, diagnose diseases, even pass tough exams. (OpenAI’s GPT-4 reportedly scored near the top tier on the bar exam – the test lawyers take to practice law.) In theory, we have the raw technological power to transform industries. So why aren’t we all riding in self-driving cars and letting AI doctors diagnose us? Because the issue isn’t what AI can do – it’s whether we trust it to do it right, reliably, and safely.

Think about it: if doctors (and, more importantly, patients) don't trust an AI's diagnosis, they won't use it, no matter how smart it is. If a customer doesn't trust an AI-powered car, they won't take their hands off the wheel. Trust is the human bottleneck that holds back AI progress. As one Harvard Business Review piece put it, there's a persistent "AI trust problem" driving a lot of skepticism in the real world.

And for good reason: we have all run into surprisingly "stupid" flaws while using AI, even though it performs incredibly well on other tasks, haven't we?

How It’s Already Backfired (in Four Sectors)

Let’s look at how trust issues are already showing up across real industries:

Healthcare

Hospitals tried AI to help with diagnosis and treatment. This works fine as long as there's a human in the loop carrying responsibility for the decisions and as long as the decisions or analysis are not very complex.

When you think about it, this is pretty limiting. What's the point of AI if humans still need to reassess its analysis, which is sometimes harder than doing the analysis yourself? It becomes just one more "expert" opinion in the room, and sometimes just noise. Nothing else.

Even if AI surpasses all the experts (assuming we can fairly measure its performance), it can't carry responsibility, and no one will carry that responsibility for it without reassessing what the AI did. So the impact remains very limited.

Finance

Big banks love the idea of AI managing risk or catching fraud. But one leading global bank spent millions on an AI to detect insider trading, only to have it flag thousands of false alarms in practice.

That led to more human intervention, not less. As a result, people trust the alarms less, even when they're correct, and the whole system collapses.

Many readers will say, "Oh, this is just a precision and recall measurement problem," so we just need to measure more accurately. But the whole point of this article is:

"Measuring performance 'accurately' gets harder and harder as the tools we have become more powerful."

How do you 'accurately' measure the performance of a "multi-agent" system that uses dozens of tools (through MCP servers) and handles hundreds of transactions per hour?
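To make the false-alarm problem concrete, here is a minimal sketch of the arithmetic. Every number in it is a hypothetical assumption of mine (event volume, base rate, recall, false-positive rate), not data from the bank in the story, and `alert_stats` is just an illustrative helper.

```python
def alert_stats(n_events, base_rate, recall, false_positive_rate):
    """Return (true_alerts, false_alerts, precision) for a detector.

    All inputs are hypothetical; they only illustrate the base-rate effect.
    """
    positives = n_events * base_rate            # events that really are fraud
    negatives = n_events - positives            # legitimate events
    true_alerts = positives * recall            # fraud the detector catches
    false_alerts = negatives * false_positive_rate  # legitimate events flagged
    precision = true_alerts / (true_alerts + false_alerts)
    return true_alerts, false_alerts, precision


# Assumed scenario: 1 in 10,000 events is suspicious, the detector catches
# 95% of them and wrongly flags 1% of the legitimate ones.
true_alerts, false_alerts, precision = alert_stats(
    n_events=1_000_000,
    base_rate=0.0001,
    recall=0.95,
    false_positive_rate=0.01,
)
print(f"real alerts: {true_alerts:.0f}")
print(f"false alarms: {false_alerts:.0f}")
print(f"precision: {precision:.2%}")
```

Under these assumptions, about 95 real alerts are buried under roughly 10,000 false ones, so fewer than 1 in 100 alarms is worth a reviewer's time. And this is the easy case: one classifier, one clear label. A multi-agent system has no such tidy confusion matrix.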

Legal

There's no lawyer I know who trusts AI tools. My highest failure rate in helping people with AI was among lawyers. For every use case, they consistently say that AI outputs are garbage, inconsistent, and not directly actionable (this might be resistance to change, or people fearing for their jobs, I can't tell). Legal professionals using AI need to check every single output before applying it — and that kills the value and impact — exactly like the healthcare example.

This scares me because I personally use AI a lot for legal advice. If professionals find that the outputs are BS — I might be doing something wrong!

Education

AI is creating confusion in schools as well. Teachers worry about students using tools like ChatGPT to cheat on essays, but the tools to detect AI-written text are unreliable.

In one incident, a college professor actually tried to use ChatGPT itself to catch cheaters – by pasting student essays into the chatbot and asking if it wrote them. The AI, essentially checking its “own” work, flagged several innocent students, who nearly failed the class as a result 🤦🏻‍♂️

The technology is here and incredibly powerful. But would you trust it with your health, finances, reputation, legal matters, or education?

What All These Failures Have in Common

Different domains. Same punchline:

If you don’t trust what the AI says, you won’t use it. No adoption. Or, at best, very little impact.

In my opinion, AI so far works best in opportunistic, "pirate" settings at the individual level.

In various surveys, 75% of executives say AI needs to earn trust before they’ll implement it.

AI is ready. People aren’t.

Band-Aids Aren’t Cures (Looking at You, RAG and LLM-as-Judge)

Tech builders are trying to patch the trust problem. Two popular fixes:

1) RAG (Retrieval-Augmented Generation)

RAG is like an open-book test for AI. Instead of answering from memory, the AI looks up info in a “trusted” source first, then responds.
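The open-book idea can be sketched in a few lines. This is a toy illustration under my own assumptions: retrieval is plain word overlap (real systems typically use embeddings), the documents are made up, and the names `retrieve` and `build_prompt` are hypothetical. The actual model call is left out on purpose.

```python
import re


def tokenize(text):
    """Lowercase a text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, k=2):
    """Return the k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, passages):
    """Put the retrieved passages in front of the question, numbered for citation."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}"
    )


# Made-up "trusted" source documents.
DOCS = [
    "The refund window is 30 days from delivery.",
    "Shipping takes 3 to 5 business days.",
    "Support is available by email on weekdays.",
]

question = "How many days is the refund window?"
passages = retrieve(question, DOCS, k=1)
prompt = build_prompt(question, passages)
print(prompt)  # this prompt would then be sent to the LLM
```

Notice what the sketch does and doesn't guarantee: it controls what the model gets to read, not what the model does with it.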

This helps. Early on, a Stanford study found that AI answers were wrong more than 20% of the time without RAG. Grounding answers in real data reduces hallucinations. Fine.

But — bigger problem:

- The AI can still misread or twist that info.

- Back in 2024, one study, “RAG-HAT,” found that even with RAG, models distorted source content.

So now you get confident-sounding answers that are wrong — with citations.

Try asking your favourite AI to write a research paper from a bunch of sources and you'll get what I mean...

Most "Trust AI" companies focus on evaluating whether LLMs stick to the sources in a RAG system. With... another LLM as judge.

2) LLM-as-Judge

This is where one AI grades another's work. Sounds like peer review.

One AI can also try to trick another with sneaky, well-crafted questions, while a third AI judges the results. But who judges the judge?

The approach also runs into automation bias: if the judging AI gives a thumbs up, we trust it too quickly.

"It’s like asking one twin if the other is lying."

If both models share the same flaws, we just get double the false confidence.
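Here is a rough sketch of the "twin" effect in numbers. The rates are hypothetical assumptions of mine, and the model is deliberately simplified: the judge never rejects a correct answer and catches only a fixed share of the wrong ones.

```python
def approved_but_wrong(error_rate, judge_catch_rate):
    """Share of judge-approved answers that are actually wrong.

    Simplifying assumption: the judge approves every correct answer and
    rejects a fraction `judge_catch_rate` of the wrong ones.
    """
    wrong_approved = error_rate * (1 - judge_catch_rate)
    correct_approved = 1 - error_rate
    return wrong_approved / (wrong_approved + correct_approved)


# Hypothetical generator that is wrong 20% of the time.
independent_judge = approved_but_wrong(error_rate=0.20, judge_catch_rate=0.90)
twin_judge = approved_but_wrong(error_rate=0.20, judge_catch_rate=0.10)

print(f"different blind spots: {independent_judge:.1%} of approved answers are wrong")
print(f"same blind spots:      {twin_judge:.1%} of approved answers are wrong")
```

With a judge that catches most of the generator's mistakes, the approved pile is about 2% wrong. With a "twin" that misses the same things, it stays above 18%, almost as bad as no judge at all, except now every answer carries a stamp of approval.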

Why the Real Problem Is the Relationship

Most fixes so far treat AI’s mistakes but ignore the relationship between humans and AI.

It’s not just, "Did the AI get it right?" It’s, "Do I know why it said that, and can I trust the reason?"

We need more than smart models. We need systems we can trust to be smart.

Systems that prove their reliability consistently and are transparent about what they’re doing. That’s how we humans build trust, isn’t it?

The Bottleneck Is Human, Not Technical

Examples:

- Self-driving cars: A 99% perfect AI still sparks fear after one crash.

- Medical imaging: Better than doctors in many studies. But if it can’t explain itself, doctors won’t rely on it.

- Finance: AI rules trading, but humans stay in the loop to prevent catastrophic errors.

According to Gartner, 40% of AI projects will fail by 2027 due to trust issues.

Not speed. Not power. Just trust.

The next big win isn’t a smarter model. It’s a model people believe in.

That's why Safe Superintelligence (SSI) has reached a $30B valuation with no product or technology, just the promise, and the trust that it can solve the "AI Trust" problem.

Very few startups are working on this hard problem so far.

So How Do We Build That Trust?

Here are five things AI builders (and leaders) need to prioritize:

1. Transparency

Let users see how decisions are made.

An LLM can be used to assess a candidate.

The candidate asks why their application is refused.

You can't answer.

The LLM can give you an "explanation," but in reality that's not a reliable account of how the model actually worked. It's just a predicted sequence of words that matches your request.

Not the same thing. If you get this, you get the LLM transparency problem.

That's a very hard problem in the world of LLMs.

Explainable AI techniques hit their limits once we start touching big LLMs. We need new research papers, new ideas, new approaches. Very few startups and labs (as far as I know) are working on this.

2. Reliability & Stress Testing

Test models under all kinds of scenarios.

- What happens with noisy inputs?

- Does the model fail gracefully or go off the rails?

Consistency = confidence.
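A noisy-input check can start very small. The sketch below is one possible approach, not a standard method: it injects typos into an input and counts how often the answer stays the same. `toy_model` is a hypothetical stand-in for whatever model you actually call, and the typo rate and variant count are arbitrary.

```python
import random


def add_typos(text, rate, rng):
    """Swap adjacent letters with probability `rate` to imitate noisy input."""
    chars = list(text)
    i = 0
    while i < len(chars) - 1:
        if chars[i].isalpha() and chars[i + 1].isalpha() and rng.random() < rate:
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
            i += 2  # skip the pair we just swapped
        else:
            i += 1
    return "".join(chars)


def consistency(model, text, n_variants=50, rate=0.1, seed=0):
    """Fraction of noisy variants for which the model gives its clean-input answer."""
    rng = random.Random(seed)
    baseline = model(text)
    same = sum(model(add_typos(text, rate, rng)) == baseline for _ in range(n_variants))
    return same / n_variants


def toy_model(text):
    """Hypothetical stand-in for a real model: a brittle keyword matcher."""
    return "refund" if "refund" in text.lower() else "other"


score = consistency(toy_model, "I would like a refund for my order")
print(f"answers unchanged under typos: {score:.0%}")
```

A score near 100% doesn't prove the answers are right, only that they're stable. A low score tells you the model goes off the rails on inputs a human would read without blinking.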

How much time would you need to evaluate a single report on last quarter's sales data generated by your favorite LLM, if you wanted to check every detail in every line? 2 hours? 6 hours?

How much time would it take to assess 100, 1,000, or 1,500 outputs to get reliable statistics?
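A back-of-the-envelope sketch of that trade-off, assuming 2 hours of human review per output and a simple normal-approximation margin of error (both assumptions are mine, and the worst-case rate of 0.5 is used to stay conservative):

```python
import math


def review_cost_hours(n_outputs, hours_per_output):
    """Total human review time for a sample of outputs."""
    return n_outputs * hours_per_output


def margin_of_error(n_outputs, observed_rate=0.5, z=1.96):
    """Approximate 95% margin of error for a measured rate (normal approximation)."""
    return z * math.sqrt(observed_rate * (1 - observed_rate) / n_outputs)


for n in (100, 1000, 1500):
    hours = review_cost_hours(n, hours_per_output=2)
    moe = margin_of_error(n)
    print(f"{n:>5} outputs: {hours:>5} review hours, margin of error +/- {moe:.1%}")
```

Under these assumptions, 100 outputs already cost 200 hours of review and still leave you with a margin of roughly 10 points either way. Tightening it to about 3 points takes 1,000 outputs and 2,000 hours.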

This is why people are using LLMs as judges. But how can we rely on a judge? Could we rely on a panel of judges? This requires some research, and again, very few people are working on this (relative to people building "agentic frameworks").
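On the panel question, here is a small sketch of what majority voting buys you, under one strong assumption: the judges make their mistakes independently. The 80% accuracy figure is hypothetical, and `majority_correct` is just an illustrative helper using the binomial formula.

```python
from math import comb


def majority_correct(n_judges, p_correct):
    """Probability that a majority of an odd-sized panel is right.

    Assumes every judge is right with probability `p_correct`,
    independently of the others.
    """
    needed = n_judges // 2 + 1
    return sum(
        comb(n_judges, k) * p_correct**k * (1 - p_correct) ** (n_judges - k)
        for k in range(needed, n_judges + 1)
    )


for n in (1, 3, 5):
    print(f"{n} judge(s): majority right {majority_correct(n, 0.8):.1%} of the time")
```

With independent judges at 80% each, three judges get you to about 90% and five to about 94%. The catch is the independence assumption: judges built on the same model, or trained on similar data, tend to make the same mistakes, and then the panel behaves more like a single judge. How close real LLM panels come to independence is exactly the kind of open question I mean.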

3. Independent Validation & Auditing

We have the FDA, building inspectors, and financial auditors. Why not AI auditors?

- Third-party certification can go a long way.

- Gartner already tracks "AI Trust, Risk, and Security Management."

4. Human Oversight (When It Matters)

Humans in the loop aren’t a crutch. They’re a safety net.

- Example: AI helps triage ER cases, but a nurse oversees final calls.

- Over time, oversight can fade if the AI earns trust.

5. Ethics & Fairness

AI should reflect shared values and avoid discrimination, but it also should not lie (like Google’s model). That’s a hard sweet spot to reach.

What This Means for You (Yes, You)

I've said this many times, and I'll say it again:

Please found, fund, or support an AI trust startup or initiative.

I'm leading by example—I started working on this problem myself just a couple of weeks ago.

If you're searching for the golden AI business opportunity for the next few years, here it is:

Solve an AI Trust problem.

It's a hard problem. But it's one that is definitely worth solving.

Please stop building "junk startups" around LLM API wrappers and calling it the "AI gold rush." There's no gold there. There's a universal truth, with or without AI: if you're not solving a hard problem, there is no reward, and there never will be.

Remember this: without solving the AI Trust problem, we're going nowhere with AI, AGI, or whatever you want to call it.

Today, most AI models are very good. Agentic frameworks are already doing wonders. The real bottleneck for impact is: Trust.

Until the next one,

—Charafeddine