Hey friends,

Today I want to talk about "Agentic AI," the new "spicy sauce" for investors and recruiters.

I know you're like me—flooded with information, posts, and podcasts explaining why "Agentic AI" will lead to the "end of history" and the end of human labor.

I think most of that is BS, and I wanted to write this short letter this week to explain why.

I know I’ve been beating this drum lately, but I need to say it again:

The “AI revolution” has hit a ceiling.

Not because the tech isn’t advancing—it absolutely is.

But for a much simpler reason:

TRUST.

Would you hand over your reputation, money, company, or health to an army of AI agents?

Didn’t think so.

You’d double-check, right?

Exactly.

So… where’s the impact if you double-check?

The only way Agentic AI delivers real value is by solving one of these two problems:

a) The system becomes trustable

→ Large-scale evaluation of performance — not “ran it 20 times, looks good enough.”

b) The system becomes transparent

→ Clear, explainable relationships between inputs and outputs.

I wrote about this last week:

Trust—not tech—is the new bottleneck.

If it takes 1,000 agents to replace one employee…

…and 1,000 humans to check or clean up the mess…

The math doesn’t work.

And the more I dive into client problems, AI projects, and deep research, the same truth keeps surfacing:

The real bottleneck for AI impact isn’t technology. It’s trust.

So next time someone says AI agents will “revolutionize” work, ask them this:

1) Do these agents actually replace YOUR work? Why NOT?

2) And if “Agentic AI” is saving you ‘dozens of hours a week,’ how much time are you spending double-checking the agents’ output?

Because here’s the reality: if nobody’s verifying the work, it’s probably junk work.

And when it comes to things that really matter—your money, your rep, your product, your operations—

No smart person or company is handing over full control to a swarm of bots.

The risk is just too high. You know it. I know it.

Even Google’s CEO admitted on Lex Fridman’s podcast that AI’s impact on their org is ~10%.

Not 30%. Not 50% (like some big consulting firm claims).

And this is Google.

I tried it myself a few quarters ago, dreaming of full operations automation for my company.

It flopped. Hard. Most tasks failed.

Without humans in the loop? No accountability. No trust.

Just bots doing enough dumb things to make you look like a clown in front of a client—or worse, torch your cash on bad decisions.

Now, don’t get me wrong—AI does have tremendous impact.

But it shines as a co-worker, not a solo operator.

One-on-one, with a human who understands the task, challenges the output, and takes ownership?

Game-changer.

Anything else?

Just junk work.

Sorry folks, the “Agentic AI is going to change the world forever” line is not gonna happen.

Let’s break this down with a few facts:

The Temptation of a 24/7 Bot Army

Why the hype keeps coming back:

*By the way, I couldn't find the real source of the 55% and 35% cost savings claims by Superhuman…

I'm sure your feed is flooded like mine with "Huge" claims about agents. Reality is a whole different story.

If you have an example of ONE agent truly automating human work "end-to-end," please send it—I will share it with the whole community. So far, while working with numerous clients in Europe and the US, I couldn't find one example delivering substantial value, except "junk" social media claims of "I'm giving away my n8n 50k worth workflow"...

Some might argue, “Yeah, we can’t do it so far, but what about GPT5 or 6 (or AGI)-powered AI Agents in a couple of years?” My answer is simple: Would you trust it with your money? And for an organization, who carries responsibility?

As far as I'm concerned (and I once believed this was possible), 24/7 fully autonomous, client-facing bots with AGENCY (not just answering questions) are not the new normal.

We might see a few of them in segments where people are willing to take the risk, but I doubt that organizations run by armies of agents (with no humans) are the future.

UNLESS WE SOLVE THE TRUST PROBLEM ↓

The Trust Gap—Where Good Tech Goes to Die

1. Black-Box Decisions

You ask, it answers, you stare at the ceiling, wondering “But…why?”

Compliance can’t approve what it can’t explain.

2. Quality Assessment at Scale

Today we run the agent a dozen times and say "looks good enough!"

What about all the edge cases and the 3%, 6%, or 20% cases where the agents don't work properly or just make you look like a clown?

Re-checking the work of an agent taking 4 actions on 3 databases and producing a document of 4 pages is very, very costly.

Trust and reputation take time to build and can be ruined in 2 seconds… Would you take the gamble?

3. Hallucinations & Errors

The bot sounds confident, cites data that doesn’t exist, and you’re left apologizing—again.

We know what hallucinations can do at the scale of one bot. Now what about an Agent (with multiple tools and LLM calls) or an “army of agents”?
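To put a rough number on that question, here is a back-of-the-envelope sketch. It assumes every step (a tool call or an LLM call) succeeds independently with the same probability, which real agents won't strictly obey. The function name and the 97% figure are illustrative assumptions, not measurements.

```python
def end_to_end_success(per_step_success: float, steps: int) -> float:
    """Chance that every step in a chain succeeds, assuming independent steps."""
    if not 0.0 <= per_step_success <= 1.0:
        raise ValueError("per_step_success must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_success ** steps


# Illustrative only: a 97% per-step success rate across chains of growing length.
for steps in (1, 4, 10, 50):
    rate = end_to_end_success(0.97, steps)
    print(f"{steps:>3} steps at 97% each -> {rate:.1%} end-to-end")
```

Under those assumptions, a 3% failure rate per step leaves only about 88.5% of four-step runs clean, and it keeps dropping as the chain gets longer. Chain several agents together and the same arithmetic applies to the whole pipeline.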

4. Who Takes the Blame?

Fire the engineer? Sue the vendor? Blame the intern? Ambiguity scares CEOs more than bugs.

Check this out:

5. Data Security Nightmares

80% of highly automated firms list privacy leaks as their #1 fear. Understandable: no one wants an AI forwarding payroll spreadsheets to an ex-employee “just in case.”

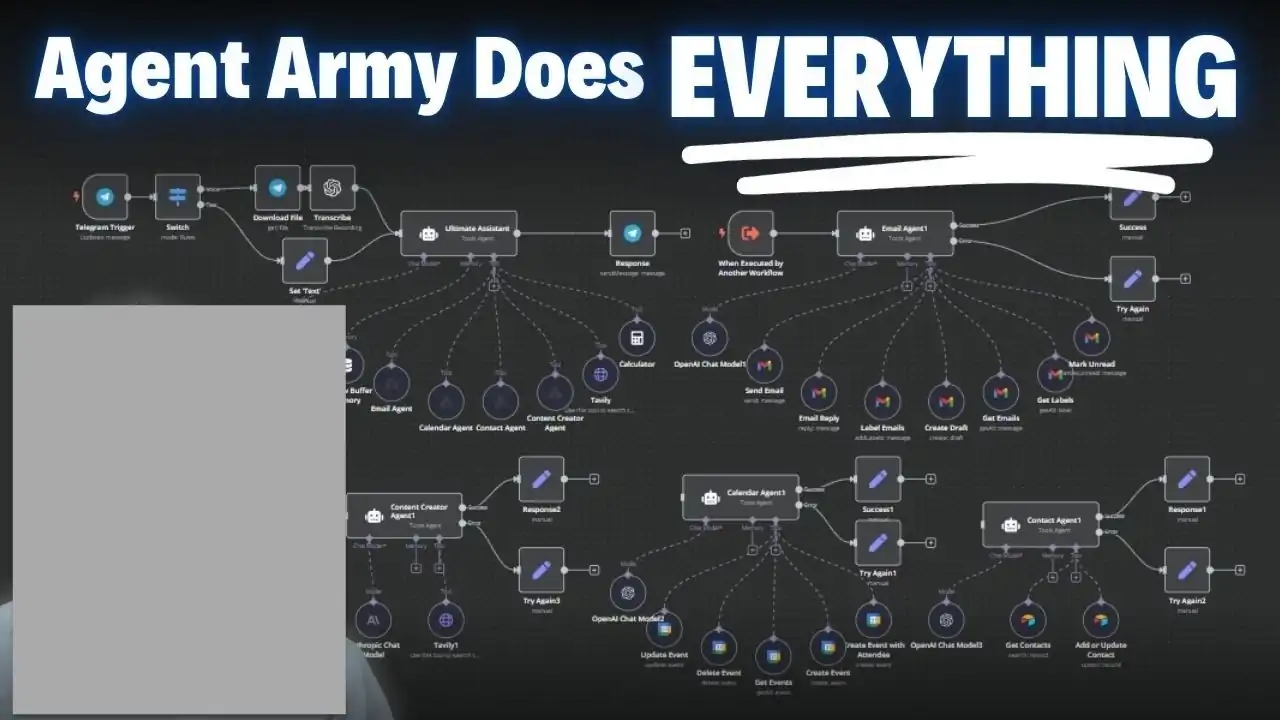

The “Spaghetti Workflow” Nightmare

The trust problem is even worse when your “clever army of agents” looks like a spaghetti mess of 100 connected items in n8n, understandable only by its creator, and even then only until day 10 😵‍💫

Picture 100+ connected agents like this in your organization, each calling APIs, updating databases, and emailing clients—sometimes in circles.

- Cascading errors: One faulty output snowballs across the system like dominoes on ice.

- Hidden states: Agents “remember” old context, then act weird months later.

- Maintenance hell: The original builder leaves, and now your ops team is excavating logs like archaeologists.

Result: mission-critical workflows feel like a Jenga tower—impressive until someone sneezes.

Again, this can have a tremendous impact for "low stakes" individual tasks, like handling your emails, producing drafts for you, prototypes, research drafts, synthesis, or starters for your projects.

BUT IT SIMPLY DOES NOT SCALE.

Trust Is the New Bottleneck (Not GPU Shortage)

We’ve largely cracked the algorithms; now we have to crack human confidence. Two fixes rise to the top:

A. Flip on the Lights (Transparency)

- Explain-your-work mode: Agents leave breadcrumb trails—“Step 1: checked purchase date…Step 3: refund within policy.”

- Human-in-the-loop: Bots draft; people approve. Training wheels stay on until the crash rate hits ~0.

- Hard role boundaries: Your “data analyst” agent can’t suddenly email customers a coupon. Guardrails calm nerves.
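Here is a minimal sketch of how those three ideas could fit together in code. Everything in it is hypothetical: the role names, the action names, the approval policy, and the `AuditedAgent` class are made up for illustration and don't come from any particular agent framework.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical role -> allowed actions map (hard role boundaries).
ALLOWED_ACTIONS = {
    "data_analyst": {"read_sales_db", "send_report"},
    "support_agent": {"read_ticket", "send_reply"},
}

# Assumed policy: anything that leaves the building needs a human sign-off.
NEEDS_HUMAN_APPROVAL = {"send_report", "send_reply"}


@dataclass
class AuditedAgent:
    role: str
    trail: list = field(default_factory=list)  # breadcrumb trail, one line per step

    def request(self, action: str, reason: str, approved_by: Optional[str] = None) -> bool:
        """Return True only if the action may run; log every decision."""
        step = len(self.trail) + 1
        if action not in ALLOWED_ACTIONS.get(self.role, set()):
            self.trail.append(f"Step {step}: BLOCKED {action} (outside role '{self.role}')")
            return False
        if action in NEEDS_HUMAN_APPROVAL and approved_by is None:
            self.trail.append(f"Step {step}: PENDING {action} (needs human approval): {reason}")
            return False
        who = f", approved by {approved_by}" if approved_by else ""
        self.trail.append(f"Step {step}: DONE {action}{who}: {reason}")
        return True


agent = AuditedAgent(role="data_analyst")
agent.request("read_sales_db", "checked purchase date")
agent.request("email_customer_coupon", "customer seemed unhappy")  # outside the role
agent.request("send_report", "refund within policy")               # waits for a human
agent.request("send_report", "refund within policy", approved_by="alice")
print("\n".join(agent.trail))
```

The point of the design is that the log is written whether or not the action runs, so a reviewer can see what was attempted, not just what succeeded.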

B. Prove It (Rigorous Testing)

- Stress-test like a crash-dummy: Thousands of edge-case runs, red-team attacks, worst-case scenarios.

- New benchmarks: Consistency, resilience, coordination—beyond plain accuracy.

- Track records, not demos: “Handled 10,000 tickets with 99.5% success, zero breaches” beats any keynote hype.
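Why does a track record beat a demo? A quick sketch using the Wilson score interval, a standard way to put error bars on a success rate. It assumes runs are independent and representative of production traffic, which is a big assumption for agents; the function name and the sample counts are mine, chosen to mirror the numbers above.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, runs: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% interval (z=1.96) for the true success rate."""
    if runs <= 0 or not 0 <= successes <= runs:
        raise ValueError("need runs > 0 and 0 <= successes <= runs")
    p = successes / runs
    denom = 1 + z * z / runs
    center = (p + z * z / (2 * runs)) / denom
    half = z * math.sqrt(p * (1 - p) / runs + z * z / (4 * runs * runs)) / denom
    # Clamp to [0, 1] to absorb floating-point overshoot at the edges.
    return max(0.0, center - half), min(1.0, center + half)


# "Ran it 20 times, looks good" versus an actual track record.
for successes, runs in ((20, 20), (9_950, 10_000)):
    low, high = wilson_interval(successes, runs)
    print(f"{successes}/{runs} successes -> true rate plausibly between {low:.1%} and {high:.1%}")
```

Twenty clean runs out of twenty only tell you the real success rate is probably above roughly 84%. It takes something like 9,950 out of 10,000 to pin it down to about 99.3% or better.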

Consider the scale of this task:

"Re-checking the work of an agent taking 4 actions on 3 databases and producing a document of 4 pages is very, very costly."

This creates a paradox where we must delegate testing to other AI systems, resulting in a recursive problem: who tests the tester? Who validates the LLM judge?

This recursive verification challenge is what makes this such a difficult problem to solve.

Otherwise, humans verify the work, and therefore there's no point in using the agent.

Humans Still Matter (Maybe More Than Ever)

Right now, the expensive part isn’t doing the task—it’s verifying the task.

- AI content moderators, AI auditors, “AI Quality engineers”—none of these jobs exist today.

- Winning setups = AI + experienced human. The bot prepares the draft; we bring nuance, ethics, and “Wait, that looks wrong” instincts.

Think of agents as over-eager interns:

Bot: “I already emailed the client!”

You: “Great…wait, which client?”

Bot: “Uh…all of them?”

You: facepalm

They’re useful—but only with a responsible adult in the room.

Quick Recap—Five Takeaways You Can Use Monday

- The sweet spot in using AI is you, someone who knows what you're doing, collaborating with AI to bring the things YOU imagine to life faster.

- Autonomy sounds cool; accountability pays the bills.

- Transparency beats raw IQ. If you can't explain it, you can't ship it.

- Test until bored. Trust grows from monotonous, repeatable success. It's a hard problem. We need to use our human neurons to solve this problem.

- AI won't replace people; it will replace un-augmented people. Become the human who knows how to manage the bots.

Closing Thought

We’ve built a race-car engine. But if the driver doesn’t trust the brakes, the car stays in the garage. Solving transparency and trust is our next step, not building GPT-6 or the next super “agent orchestrator”.

Until then, keep a hand on the eject lever—and maybe a pot of coffee for those 3 a.m. damage-control shifts.

Trust is golden. We’re still mining it.

All the best.

— Charafeddine